When training models with stochastic gradient descent (SGD), the choice of step size, also known as the learning rate, is crucial. Many step size strategies have been developed to improve SGD, and recent work has focused on how step size values are distributed across iterations. A common weakness of existing methods is that they assign extremely small values to the final iterations, so those last updates contribute little and the overall efficiency of the algorithm suffers.
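To make the "extremely small values" concrete, here is a minimal sketch of cosine annealing, a widely used schedule: η_t = η_min + ½(η_0 − η_min)(1 + cos(πt/T)). The settings η_0 = 0.1, η_min = 0 and T = 1000 are illustrative assumptions, not values taken from the study.

```python
import math

def cosine_step_size(t: int, T: int, eta0: float = 0.1, eta_min: float = 0.0) -> float:
    """Cosine-annealed step size for iteration t in 1..T (single cycle, no restarts)."""
    return eta_min + 0.5 * (eta0 - eta_min) * (1.0 + math.cos(math.pi * t / T))

# Illustrative settings (assumed, not from the study).
ETA0 = 0.1
T = 1000
schedule = [cosine_step_size(t, T, ETA0) for t in range(1, T + 1)]

# Ratio of each of the last five step sizes to the initial step size eta0.
# With eta_min = 0 these ratios collapse toward zero as t approaches T.
for t in range(T - 4, T + 1):
    print(f"t={t}: eta_t / eta0 = {schedule[t - 1] / ETA0:.2e}")
```

Over the last five iterations the ratio stays below 10⁻⁴ and reaches zero at t = T, so those SGD updates barely move the parameters.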

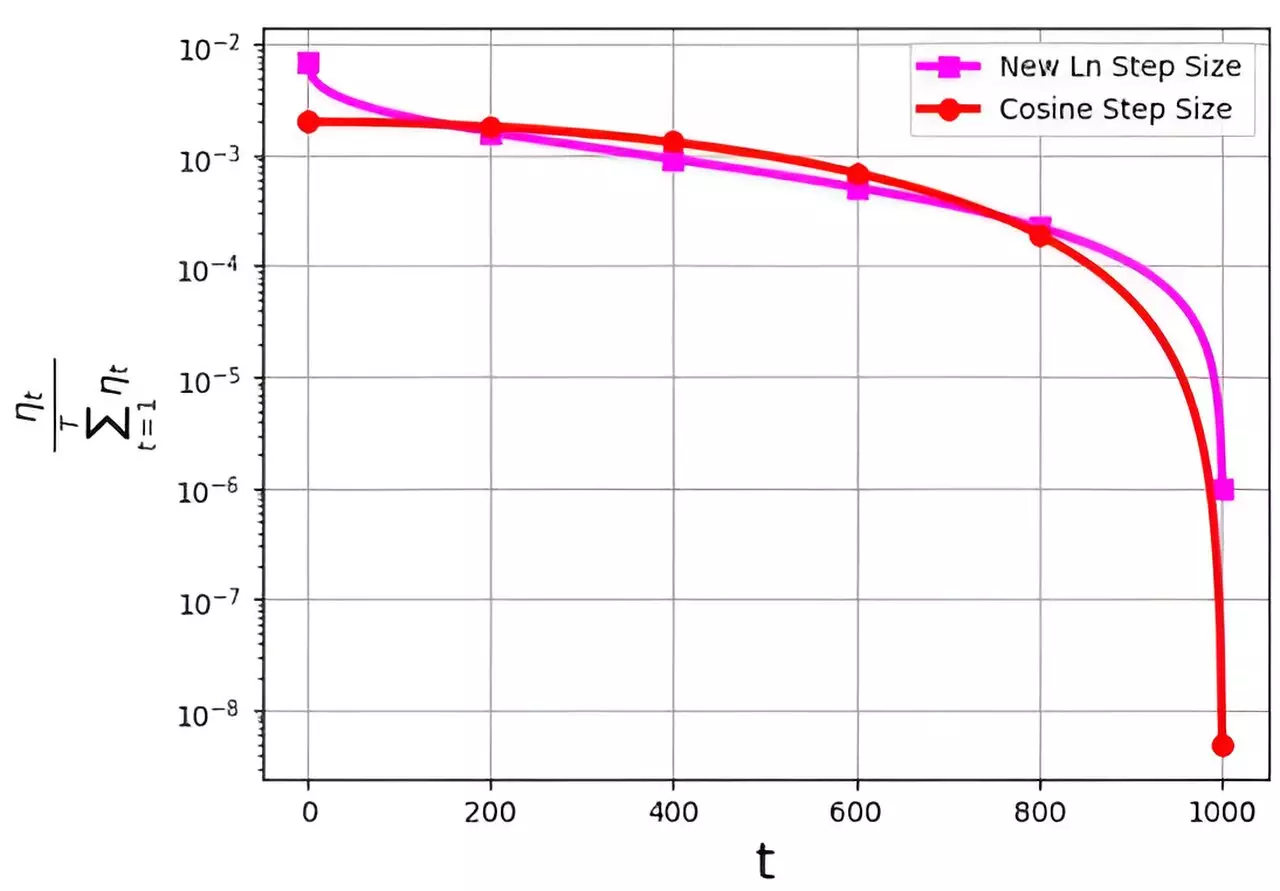

A team of researchers led by M. Soheil Shamaee published a study in Frontiers of Computer Science introducing a new logarithmic step size for SGD. The new step size performs especially well in the later iterations of the algorithm. Because it gives the concluding iterations a higher probability of selection, it outperforms the traditional cosine step size, which assigns inadequately small values to the final iterations.
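The sketch below contrasts cosine annealing with a logarithmic decay and computes a "probability of selection" under one common reading, assumed here: in non-convex SGD analyses, the returned iterate is often drawn with probability η_t / Σ_s η_s, so larger late step sizes give late iterations more weight. The logarithmic formula η_t = η_0(1 − ln t / ln(T + 1)) is a hypothetical stand-in chosen for illustration, not the formula from the paper; the helper `tail_mass` and its 10% cutoff are likewise assumptions.

```python
import math

def cosine_step_size(t: int, T: int, eta0: float = 0.1) -> float:
    # Cosine annealing from eta0 down to 0 over iterations t = 1..T.
    return 0.5 * eta0 * (1.0 + math.cos(math.pi * t / T))

def log_step_size(t: int, T: int, eta0: float = 0.1) -> float:
    # HYPOTHETICAL logarithmic decay for illustration only (not the paper's formula):
    # eta_t = eta0 * (1 - ln(t) / ln(T + 1)), which stays strictly positive at t = T.
    return eta0 * (1.0 - math.log(t) / math.log(T + 1))

def selection_probabilities(steps: list[float]) -> list[float]:
    # Random-iterate output rule (assumed): pick iterate t with probability eta_t / sum(eta).
    total = sum(steps)
    return [s / total for s in steps]

def tail_mass(probs: list[float], fraction: float = 0.1) -> float:
    # Hypothetical helper: total selection probability on the last `fraction` of iterations.
    k = max(1, int(len(probs) * fraction))
    return sum(probs[-k:])

T = 1000
cos_steps = [cosine_step_size(t, T) for t in range(1, T + 1)]
log_steps = [log_step_size(t, T) for t in range(1, T + 1)]

print(f"final step    cosine={cos_steps[-1]:.2e}  log={log_steps[-1]:.2e}")
print(f"last-10% mass cosine={tail_mass(selection_probabilities(cos_steps)):.4f}"
      f"  log={tail_mass(selection_probabilities(log_steps)):.4f}")
```

With T = 1000, the stand-in logarithmic schedule keeps a small positive step at t = T and places about three times as much selection probability on the last 10% of iterations as cosine annealing does. The trade-off is that this particular formula shrinks much faster in the middle of training; that is a property of the stand-in, not necessarily of the published schedule.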

The numerical results from the study demonstrate the effectiveness of the logarithmic step size on the Fashion-MNIST, CIFAR10, and CIFAR100 datasets. Notably, when combined with a convolutional neural network (CNN) model, the logarithmic step size improved test accuracy on CIFAR100 by 0.9%. These findings support the value of the logarithmic step size for improving SGD performance across different datasets.

Implications for Machine Learning

The logarithmic step size is a notable advance in machine learning optimization. By addressing a limitation of existing step size strategies, namely the very small values they assign to the final iterations, it offers a more robust and efficient option for training neural networks. Better SGD performance with this step size could in turn improve model accuracy and convergence speed across a range of machine learning applications.

The research by M. Soheil Shamaee and colleagues highlights how much step size selection matters for stochastic gradient descent. The logarithmic step size performs better than the conventional cosine step size in the final iterations, and the gains in test accuracy reported in the study point to its potential for improving machine learning algorithms. As the field continues to evolve, approaches like the logarithmic step size may help advance the capabilities of neural networks and deep learning systems.
