In recent years, deep learning techniques have approached or matched human-level accuracy on tasks such as image classification and natural language processing. This progress has spurred research into new hardware that can meet the computational demands of these models.

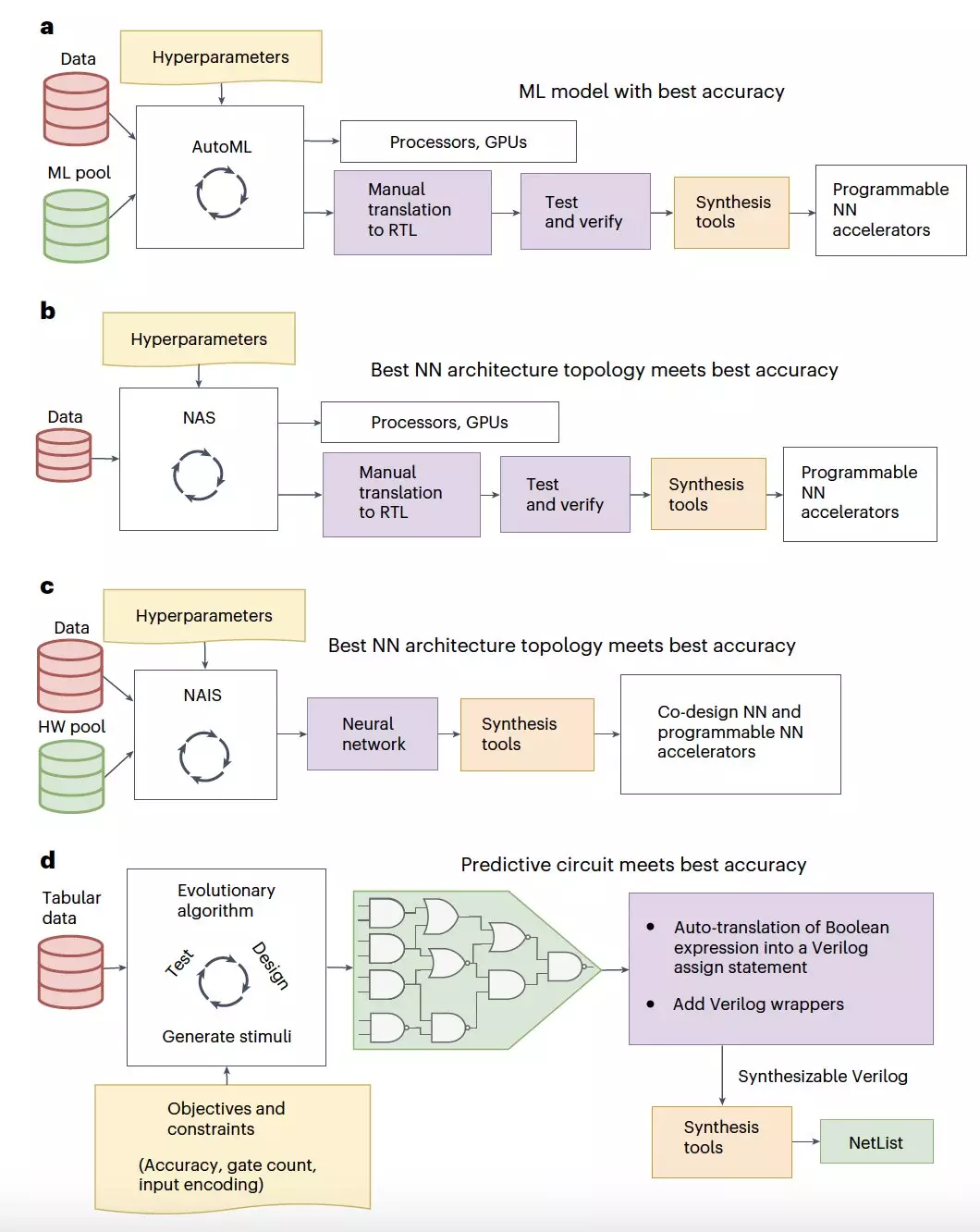

One way researchers have tried to run deep neural networks more efficiently is by building hardware accelerators. These are specialized computing devices designed to perform specific computational tasks more efficiently than general-purpose CPUs. Accelerator design has typically been carried out independently of the development and training of the models that run on them, but a few research teams have begun to address both objectives together.

A team of researchers from the University of Manchester and Pragmatic Semiconductor recently introduced a method for generating classification circuits directly from tabular data, that is, structured data organized in rows and columns that combines numerical and categorical features. Their approach, called “tiny classifiers,” is described in a paper published in Nature Electronics.

The researchers, led by Konstantinos Iordanou and Timothy Atkinson, used an evolutionary algorithm to search for predictor circuits that classify tabular data, with each circuit limited to no more than 300 logic gates. Despite this small size, the resulting tiny classifier circuits achieved accuracy comparable to that of state-of-the-art machine learning classifiers.
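The article does not describe the authors' evolutionary algorithm in detail. As a rough illustration of the general idea only, the sketch below evolves a small feed-forward netlist of two-input logic gates with a (1+λ) evolution strategy in the style of Cartesian genetic programming, using training accuracy as the fitness. Everything in it is an assumption rather than the paper's method: the gate set, the mutation scheme, the hypothetical function names (`evolve`, `evaluate`, `active_gates`) and the toy dataset. Input features are assumed to be already binarized, and a 20-gate budget stands in for the paper's 300-gate limit.

```python
import random
from itertools import product

# Hypothetical two-input gate library (the paper's actual gate set may differ).
GATES = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
    "NAND": lambda a, b: 1 - (a & b),
}

def random_gate(idx, n_inputs, rng):
    # Gate idx may read any primary input or any earlier gate, so the circuit stays acyclic.
    n_sources = n_inputs + idx
    return (rng.choice(list(GATES)), rng.randrange(n_sources), rng.randrange(n_sources))

def evaluate(genome, bits):
    # Simulate the netlist on one binarized row; nodes 0..n_inputs-1 are the inputs.
    gates, out = genome
    nodes = list(bits)
    for op, a, b in gates:
        nodes.append(GATES[op](nodes[a], nodes[b]))
    return nodes[out]

def accuracy(genome, X, y):
    return sum(evaluate(genome, x) == t for x, t in zip(X, y)) / len(y)

def active_gates(genome, n_inputs):
    # Count only gates reachable from the output node; unused slots need no hardware.
    gates, out = genome
    needed, stack = set(), [out]
    while stack:
        node = stack.pop()
        if node >= n_inputs and node not in needed:
            needed.add(node)
            _, a, b = gates[node - n_inputs]
            stack += [a, b]
    return len(needed)

def mutate(genome, n_inputs, rng, rate=0.1):
    # Replace each gate (and the output connection) with probability `rate`.
    gates, out = genome
    new_gates = [random_gate(i, n_inputs, rng) if rng.random() < rate else g
                 for i, g in enumerate(gates)]
    if rng.random() < rate:
        out = rng.randrange(n_inputs + len(gates))
    return (new_gates, out)

def evolve(X, y, n_gates=20, generations=200, offspring=4, seed=0):
    # (1+lambda) strategy: keep one parent, replace it with any child that is at least as fit.
    rng = random.Random(seed)
    n_inputs = len(X[0])
    parent = ([random_gate(i, n_inputs, rng) for i in range(n_gates)],
              rng.randrange(n_inputs + n_gates))
    best = accuracy(parent, X, y)
    history = [best]
    for _ in range(generations):
        for _ in range(offspring):
            child = mutate(parent, n_inputs, rng)
            fit = accuracy(child, X, y)
            if fit >= best:  # accepting ties allows neutral drift
                parent, best = child, fit
        history.append(best)
    return parent, best, history

# Toy binarized dataset: label = (x0 AND x1) OR x2 over all 4-bit rows.
X = [list(bits) for bits in product([0, 1], repeat=4)]
y = [(x[0] & x[1]) | x[2] for x in X]
circuit, acc, history = evolve(X, y)
print(f"active gates={active_gates(circuit, 4)}, train accuracy={acc:.2f}")
```

Because the genome has a fixed number of gate slots, the budget is an upper bound: only the gates reachable from the output, as counted by `active_gates`, would need to be built. Accepting children that tie the parent's fitness lets the search drift across equally good circuits, a common choice in Cartesian genetic programming.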

The researchers evaluated the tiny classifiers both in simulation and on a fabricated low-cost integrated circuit. The circuits matched the accuracy of the best-performing machine learning baselines while requiring far less hardware: in silicon chip simulations, they used significantly less area and power, suggesting they could be deployed efficiently in a range of applications.

Looking ahead, the tiny classifiers could be applied to a range of real-world tasks. For example, they could serve as triggering mechanisms on chips for smart packaging and the monitoring of goods, or as the basis of low-cost near-sensor computing systems. Their small size and low power consumption make them well suited to applications where chip area and energy are tightly constrained.

Hardware accelerators and circuit-level methods such as tiny classifiers both aim to make machine learning more computationally efficient. As the technology matures, these approaches could enable further advances in artificial intelligence and machine learning applications.
