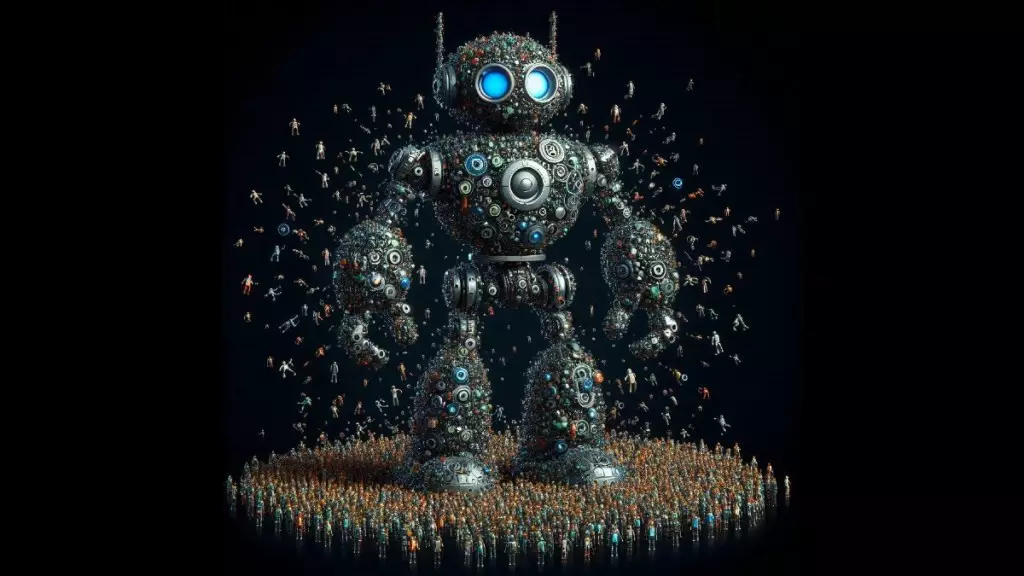

Mixture-of-Experts (MoE) has become a widely used way to scale large language models (LLMs) without a matching increase in computational cost. Instead of running every input through the entire model, an MoE layer routes each input to a few specialized “expert” modules. This lets LLMs grow their parameter count while the compute spent per token, and therefore the inference cost, stays roughly constant.

Despite the success of MoE in popular LLMs such as Mixtral, DBRX, Grok, and reportedly GPT-4, existing MoE techniques have limitations that restrict them to a relatively small number of experts. Mixtral 8x7B, for example, uses eight experts per layer. This cap limits how far the MoE approach can be scaled.

A recent paper from Google DeepMind introduces Parameter Efficient Expert Retrieval (PEER), an architecture designed to overcome these limitations by scaling MoE models to millions of experts. According to the paper, PEER improves the performance-compute tradeoff of large language models, which could make larger and more capable models cheaper to train and run.

Every transformer block in an LLM contains an attention layer and a feedforward (FFW) layer. The attention layer computes the relationships between input tokens, while the feedforward layer is widely believed to store much of the model’s knowledge. FFW layers account for a large share of a model’s parameters. Because a dense FFW layer uses all of its parameters for every token, enlarging it raises compute costs proportionally, which makes FFW layers a bottleneck when scaling transformers.
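The following rough calculation shows why the FFW dominates. It assumes a vanilla transformer block with four d×d attention projections and a two-layer FFW using the common 4× hidden expansion, and it ignores biases and normalization parameters. Under those assumptions the FFW holds about two-thirds of the block's weights. Gated (GLU-style) FFWs used in many recent LLMs change the exact ratio. The function name is illustrative.

```python
def block_param_counts(d_model: int, ffw_mult: int = 4) -> dict:
    """Approximate weight counts for one vanilla transformer block.

    Assumes Q, K, V and output projections of size d_model x d_model and a
    two-layer FFW with hidden width ffw_mult * d_model. Biases and
    layer-norm parameters are ignored.
    """
    d_ff = ffw_mult * d_model
    attention = 4 * d_model * d_model      # W_q, W_k, W_v, W_o
    ffw = 2 * d_model * d_ff               # up-projection and down-projection
    return {"attention": attention, "ffw": ffw, "ffw_share": ffw / (attention + ffw)}


counts = block_param_counts(d_model=4096)
print(counts["attention"], counts["ffw"], round(counts["ffw_share"], 3))
# → 67108864 134217728 0.667
```

Since a dense FFW multiplies every one of those weights for every token, its per-token compute grows in step with its parameter count. MoE is designed to break exactly this coupling.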

The Mechanism of MoE

MoE addresses the FFW bottleneck by replacing the dense FFW layer with a set of sparsely activated experts. Each expert holds only a fraction of the parameters of the full dense layer and specializes in different areas. A router scores the experts for each input token and sends the token only to the few highest-scoring ones, so only a small part of the layer's parameters is computed for any given token.
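Here is a minimal sketch of top-k routing. It assumes a linear router and softmax gates renormalized over the chosen experts, which is the scheme Mixtral uses; other MoE models differ in the details. The experts are tiny random-weight MLPs standing in for trained ones, and names such as `moe_forward` are hypothetical.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def make_expert(d_model, d_hidden, rng):
    """A tiny two-layer ReLU MLP with random weights (illustrative only)."""
    w_in = [[rng.gauss(0, 0.1) for _ in range(d_model)] for _ in range(d_hidden)]
    w_out = [[rng.gauss(0, 0.1) for _ in range(d_hidden)] for _ in range(d_model)]

    def expert(x):
        h = [max(0.0, v) for v in matvec(w_in, x)]
        return matvec(w_out, h)

    return expert


def moe_forward(x, router_w, experts, k=2):
    """Top-k MoE: score every expert, run only the k best, mix their outputs."""
    logits = matvec(router_w, x)                      # one score per expert
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])         # renormalize over the chosen k
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)                             # only k experts are evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top, gates


rng = random.Random(0)
d_model, n_experts = 8, 8
experts = [make_expert(d_model, 16, rng) for _ in range(n_experts)]
router_w = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(n_experts)]
x = [rng.gauss(0, 1) for _ in range(d_model)]
y, chosen, gates = moe_forward(x, router_w, experts, k=2)
print(chosen, [round(g, 3) for g in gates])
```

The router in this sketch still scores all `n_experts` experts for every token. That cost is negligible for eight experts but grows linearly with the pool.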

Research shows that the optimal number of experts in an MoE model depends on several factors, including the number of training tokens and the compute budget. Increasing granularity, which means splitting the layer into more and smaller experts, has been shown to improve performance, help models acquire new knowledge, and help them adapt to continuously changing data streams.
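The arithmetic below shows what "higher granularity" means. It assumes one common definition from fine-grained MoE work: each expert's hidden width shrinks by a factor G while both the number of experts and the number of active experts grow by G, so total and active FFW width stay fixed. The function and its parameters are hypothetical. The combination count is included only as one intuition for why finer experts can help: the number of distinct expert sets a token can use grows enormously.

```python
from math import comb


def moe_config(d_ff, n_experts, top_k, granularity):
    """Split each expert into `granularity` smaller ones and route to proportionally more.

    Assumed definition: expert width d_ff / G, G * n_experts experts, G * top_k active.
    Total and active FFW width are therefore unchanged as G varies.
    """
    n = n_experts * granularity
    k = top_k * granularity
    d_expert = d_ff // granularity
    return {
        "experts": n,
        "active": k,
        "expert_width": d_expert,
        "total_width": n * d_expert,
        "active_width": k * d_expert,
        "routing_choices": comb(n, k),   # distinct sets of active experts
    }


for g in (1, 4, 16):
    print(g, moe_config(d_ff=4096, n_experts=8, top_k=2, granularity=g))
```

At G = 1 (eight experts, two active) there are only 28 possible expert pairs. Pushing granularity toward millions of single-neuron experts is the regime PEER targets. There, a router that scores every expert becomes the limiting cost.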

The Innovation of PEER in Scaling MoE

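PEER gets past the limits of traditional MoE architectures by replacing the conventional router with a learned index that efficiently retrieves experts from a very large pool. For each input, a cheap first computation builds a shortlist of candidate experts, and only the top candidates are then selected and activated. The paper builds this index with product keys: each expert's key is the concatenation of two sub-keys drawn from two much smaller sets, so retrieval cost grows with the square root of the number of experts rather than linearly. Each expert is tiny, with a single neuron in its hidden layer, and several retrieval heads select experts independently from the same shared pool. According to the paper, this design improves parameter efficiency and knowledge transfer between experts.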
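Below is a minimal pure-Python sketch of a PEER-style layer. It covers the three pieces described above: product-key retrieval, single-neuron experts, and multiple heads sharing one expert pool. Several details are assumptions rather than the paper's code: ReLU as the expert activation, a plain linear query projection per head, and random weights in place of trained ones. The class and parameter names are also hypothetical.

```python
import math
import random


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def softmax(xs):
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [v / s for v in e]


def top_k(scores, k):
    """Indices of the k largest scores, best first."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


class PEERSketch:
    """Hypothetical PEER-style layer: product-key retrieval over single-neuron experts."""

    def __init__(self, d_model, n_sub, d_query, n_heads, k, seed=0):
        rng = random.Random(seed)

        def rand_matrix(rows, cols):
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.n_sub, self.k, self.half = n_sub, k, d_query // 2
        n_experts = n_sub * n_sub                  # N = n_sub ** 2 experts
        # Two small sub-key sets; expert (a, b) has key concat(keys1[a], keys2[b]).
        self.keys1 = rand_matrix(n_sub, self.half)
        self.keys2 = rand_matrix(n_sub, self.half)
        # One query projection per head; every head shares the same experts.
        self.queries = [rand_matrix(d_query, d_model) for _ in range(n_heads)]
        # Expert i is a single hidden neuron: input weights u[i], output weights v[i].
        self.u = rand_matrix(n_experts, d_model)
        self.v = rand_matrix(n_experts, d_model)

    def retrieve(self, q):
        """Top-k experts for query q, scoring 2 * n_sub sub-keys plus k * k pairs."""
        q1, q2 = q[: self.half], q[self.half:]
        s1 = [dot(key, q1) for key in self.keys1]
        s2 = [dot(key, q2) for key in self.keys2]
        top1, top2 = top_k(s1, self.k), top_k(s2, self.k)
        cands = [(s1[a] + s2[b], a * self.n_sub + b) for a in top1 for b in top2]
        best = sorted(cands, reverse=True)[: self.k]
        return [i for _, i in best], [s for s, _ in best]

    def forward(self, x):
        out = [0.0] * len(x)
        for w_q in self.queries:                   # multi-head retrieval
            q = [dot(row, x) for row in w_q]
            idx, scores = self.retrieve(q)
            for gate, i in zip(softmax(scores), idx):
                h = max(0.0, dot(self.u[i], x))    # single-neuron expert (ReLU assumed)
                out = [o + gate * h * vi for o, vi in zip(out, self.v[i])]
        return out


layer = PEERSketch(d_model=16, n_sub=32, d_query=8, n_heads=4, k=8)
rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(16)]
y = layer.forward(x)
print(len(y))  # → 16
```

With `n_sub = 32` the pool holds 1,024 experts, yet each head scores only 2 × 32 sub-keys plus 8 × 8 candidate pairs. The shortlist loses nothing: if a pair (a, b) is among the k best sums, then a must be in the top-k of the first half and b in the top-k of the second, because otherwise k better pairs would exist. At about a million experts (`n_sub = 1024`), a head would score 2,048 sub-keys instead of 1,048,576 full keys.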

PEER could potentially play a role in Google’s Gemini 1.5 models, which Google has described as using a new MoE architecture. In the paper’s language-modeling experiments, PEER layers outperform dense FFW layers and coarse-grained MoEs on the performance-compute tradeoff. That result makes PEER a promising alternative to dense feedforward layers and could lead to more efficient and cost-effective LLM training and deployment.

PEER marks a significant step in the evolution of MoE architectures for LLMs. By removing the expert-count limits of traditional MoE techniques and offering a scalable way to grow models, it sets a new reference point for efficiency and performance in language modeling.
