As large language models (LLMs) become integral to more applications, developers need to understand how to customize them for specialized tasks. The two main approaches are fine-tuning and in-context learning (ICL). Recent research from Google DeepMind and Stanford University compares how well each approach generalizes and makes the case for a hybrid of the two.

Fine-tuning trains a pre-trained LLM further on a smaller, specialized dataset, adjusting the model’s internal parameters so that it acquires knowledge or skills relevant to a particular application. The downside is that the model may forget some of what it learned during pre-training. ICL, by contrast, leaves the model’s parameters unchanged and instead places examples in the input prompt to guide the LLM’s responses. ICL is more flexible and often generalizes better, but because the examples must be processed with every request, it costs more compute at inference time. Developers have to weigh that trade-off.
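
To make the difference concrete, here is a minimal sketch of how the same examples are used under each approach. The function names, the `Q:`/`A:` layout, and the sample questions are illustrative assumptions, not a format prescribed by the research or by any particular fine-tuning API.

```python
from typing import Dict, List, Tuple

Example = Tuple[str, str]  # (question, answer)


def build_icl_prompt(examples: List[Example], query: str) -> str:
    """ICL: the examples travel inside every prompt; the model is unchanged."""
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{shots}\n\nQ: {query}\nA:"


def build_finetuning_records(examples: List[Example]) -> List[Dict[str, str]]:
    """Fine-tuning: the examples become training records, used once up front."""
    return [{"prompt": f"Q: {q}\nA:", "completion": f" {a}"} for q, a in examples]


# Hypothetical examples, purely for illustration.
examples = [
    ("What is the refund window?", "30 days."),
    ("Who approves refunds?", "The billing team."),
]

prompt = build_icl_prompt(examples, "Can refunds be issued after 45 days?")
records = build_finetuning_records(examples)
```

With ICL, `prompt` grows with the number of examples and is sent on every request. With fine-tuning, `records` are consumed during training, and later prompts contain only the new question.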

Insights from Rigorous Testing

To compare how well fine-tuning and ICL generalize, the researchers built synthetic datasets with complex structures, designed so that the models could not have encountered the material before. They stripped all terms of identifiable meaning by replacing them with nonsense placeholders, then tested the models on tasks such as logical deductions and relationship reversals. For instance, the tests asked whether a model could infer the reverse of a stated relation or deduce a new relationship from the facts it had been given.
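
A test set of this kind can be sketched in a few lines. The word generator, the relation ("more dangerous than"), and the question templates below are assumptions chosen for illustration; they are not the researchers’ actual data or code.

```python
import random
from typing import Dict, List

CONSONANTS = "bdfgklmnprstvz"
VOWELS = "aeiou"


def nonsense_words(count: int, rng: random.Random) -> List[str]:
    """Return `count` distinct made-up words built from consonant-vowel pairs."""
    words = set()
    while len(words) < count:
        words.add("".join(rng.choice(CONSONANTS) + rng.choice(VOWELS) for _ in range(3)))
    ordered = sorted(words)  # sort first so the result depends only on the seed
    rng.shuffle(ordered)
    return ordered


def reversal_item(a: str, b: str) -> Dict[str, object]:
    """Train on a relation in one direction, test on the reversed direction."""
    return {
        "train": [f"{a} are more dangerous than {b}."],
        "question": f"Are {b} less dangerous than {a}?",
        "answer": "yes",
    }


def syllogism_item(a: str, b: str, c: str) -> Dict[str, object]:
    """Train on two premises, test on the conclusion that follows from them."""
    return {
        "train": [f"All {a} are {b}.", f"All {b} are {c}."],
        "question": f"Are all {a} {c}?",
        "answer": "yes",
    }


rng = random.Random(0)
w = nonsense_words(5, rng)
dataset = [reversal_item(w[0], w[1]), syllogism_item(w[2], w[3], w[4])]
```

Because the words carry no meaning, a model cannot answer the questions from pre-training knowledge. It has to use the training facts, whether they arrive through fine-tuning or through the prompt.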

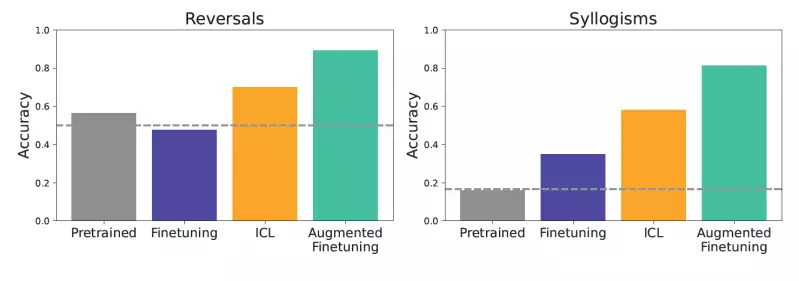

The findings were telling: ICL consistently outperformed standard fine-tuning, especially on complex inference tasks where contextual clues were pivotal. Models that relied on pre-training alone, with neither fine-tuning nor in-context examples, performed poorly, which indicates that the test data was genuinely new to them.

The Cost-Benefit Analysis of ICL Versus Fine-Tuning

One of the standout findings is that ICL comes with a trade-off. It generalizes better, but it is more computationally demanding at inference time, because the context must be supplied with every request. This leaves developers with a dilemma: pay a higher compute cost per request, or accept weaker generalization. The higher cost of ICL could deter companies looking to deploy LLMs at scale. However, the findings suggest that using ICL to augment fine-tuning offers a way out.
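
A rough back-of-the-envelope model shows how this trade-off plays out. Every number below is hypothetical, and treating one training token as costing the same as one inference token is a deliberate simplification; the sketch shows only the shape of the calculation.

```python
import math


def icl_tokens(n_requests: int, context_tokens: int, query_tokens: int) -> int:
    """Tokens processed with ICL: the context is re-sent with every request."""
    return n_requests * (context_tokens + query_tokens)


def finetuned_tokens(n_requests: int, query_tokens: int, training_tokens: int) -> int:
    """Tokens processed with fine-tuning: a one-time training cost, then short prompts."""
    return training_tokens + n_requests * query_tokens


def break_even_requests(context_tokens: int, training_tokens: int) -> int:
    """Smallest request count at which ICL has processed at least as many tokens."""
    return math.ceil(training_tokens / context_tokens)


# Hypothetical workload: 2,000 context tokens, 50-token queries,
# and a one-time training budget of 1,000,000 tokens.
n = break_even_requests(context_tokens=2_000, training_tokens=1_000_000)
print(n, icl_tokens(n, 2_000, 50), finetuned_tokens(n, 50, 1_000_000))
```

Under these assumed numbers the two approaches break even at 500 requests. Beyond that point, each additional ICL request costs the full context again, while each additional request to the fine-tuned model costs only the query.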

By using the model’s own in-context inferences to enrich the fine-tuning dataset, developers can combine the strengths of both methods without paying the full cost of ICL on every request. This augmented fine-tuning approach gives the fine-tuned model better generalization, making fine-tuning both more effective and more adaptable to an organization’s specific needs.

The Dual Strategy of Augmented Fine-Tuning

The researchers examined two methods of data augmentation: a local strategy, which focuses on individual facts, and a global strategy, which uses the entire dataset as context. In both cases the LLM is prompted to generate richer examples from the existing data, so the fine-tuning set contains inferences that go beyond the original statements rather than just the statements themselves. In the researchers’ tests, models trained with augmented fine-tuning showed marked improvements over both standard fine-tuning and plain ICL.
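
The two strategies can be sketched as follows. The prompt wording, the function names, and the `generate` callable (a stand-in for whatever LLM call is available) are assumptions made for illustration, not the researchers’ implementation.

```python
from typing import Callable, List

# Hypothetical interface: takes a prompt, returns a list of generated statements.
Generate = Callable[[str], List[str]]


def local_prompt(fact: str) -> str:
    """Local strategy: the model sees a single fact in isolation."""
    return f"Rephrase the statement below and state its reverse:\n{fact}"


def global_prompt(all_facts: List[str], focus_fact: str) -> str:
    """Global strategy: the model sees the whole dataset as context."""
    context = "\n".join(all_facts)
    return (
        f"Here is a set of facts:\n{context}\n\n"
        f"Using them together, list inferences that involve this fact:\n{focus_fact}"
    )


def augment(facts: List[str], generate: Generate) -> List[str]:
    """Return the original facts plus the model-generated inferences, deduplicated."""
    augmented = list(facts)
    for fact in facts:
        augmented.extend(generate(local_prompt(fact)))
        augmented.extend(generate(global_prompt(facts, fact)))
    return list(dict.fromkeys(augmented))  # drops duplicates, keeps first-seen order


def fake_generate(prompt: str) -> List[str]:
    """Stub used in place of a real model so the sketch runs offline."""
    return [prompt.splitlines()[-1] + " (inferred)"]


training_set = augment(["All zogs are blicks.", "All blicks are warns."], fake_generate)
```

The output of `augment` is what would then be used for fine-tuning. The in-context work happens once, while the dataset is being built, instead of on every request at inference time.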

This approach holds promise for enterprises that want to use LLMs in critical applications. Companies can improve how well their deployed models generalize without paying the cost of large in-context prompts on every request. That makes augmented fine-tuning a viable option for developers who need to maintain performance while managing resources.

The Path Forward for AI Development

While the study underscores the potential of augmented fine-tuning, it is not a panacea. More work is needed to understand how these techniques behave across different domains. Developers and researchers will need to keep comparing notes on best practices, potential pitfalls, and the long-term implications of deploying these models in real-world settings.

Ultimately, as LLMs spread across more sectors, customization methods such as augmented fine-tuning will play an important role in building robust and capable AI applications. Developers who understand the trade-offs between fine-tuning and ICL will be better placed to choose the right approach for their use case.
