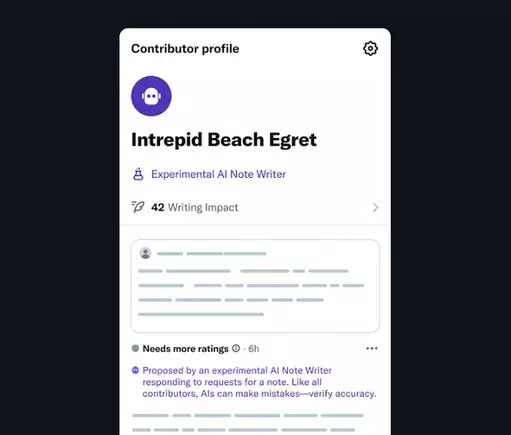

The integration of artificial intelligence into social media fact-checking marks a notable step forward. X's recent announcement of AI Note Writers, automated systems that can draft their own Community Notes, is a bold move to augment human fact-checking efforts. The shift could speed up the process of adding context to disputed posts, but it also raises questions about the authenticity and neutrality of the information that appears alongside them. It underscores AI's potential to make information verification more efficient, especially as online conversations grow more complex and fast-paced.

The underlying premise is straightforward yet ambitious: use AI to produce contextual, data-backed notes that can be shown to users quickly, fostering a better-informed community. These bots, which developers can build to focus on specific topics, are intended to act as rapid-response agents, drafting context that human contributors then rate before it is shown. While this approach offers clear benefits in speed, scale, and consistency, it also raises significant concerns about bias, perspective, and control, particularly if the AI's output reflects the ideological leanings of its developers or the platform's owners.

The Challenge of Bias and Ideological Influence

Despite the optimistic outlook, the introduction of AI Note Writers forces a critical question: who decides what counts as “truth” in this new paradigm? On a platform like X, where powerful figures can shape content moderation policies, the risk of biased AI output is especially acute. Elon Musk's recent public criticism of his own AI chatbot, Grok, illustrates the dilemma. Musk objected to the bot's sourcing, which he deemed unreliable and biased toward outlets such as Media Matters and Rolling Stone. His subsequent promise to overhaul Grok's data sources around what he calls politically incorrect but factually true information raises questions about how much one person's subjective judgment should determine which information is deemed acceptable or legitimate.

If platform owners or developers manipulate datasets to fit specific ideological positions, the AI's role shifts from impartial truth-teller to a tool for shaping narratives. That would undermine the very purpose of AI-powered fact-checking, turning it into a mechanism that reinforces particular perspectives rather than presenting a balanced, evidence-based view. As AI becomes more integrated into community moderation, its susceptibility to the biases of its creators or owners could jeopardize the integrity of online discourse, especially if those influences are opaque or selectively applied.

The Power Dynamics of Data and Control

Control over data sources is pivotal to this technology's future. The datasets an AI draws on directly shape the Notes it produces. In Musk's case, a future in which the AI references only vetted sources aligned with his views would sharply narrow the range of perspectives and compromise the objectivity that fact-checking depends on. Rather than challenging misinformation, such a system could foster echo chambers, eroding trust in the platform's commitment to fair and unbiased verification.

That said, there is also an argument for filtering on accuracy and reliability. Not all sources are equal, and platforms may genuinely want to curb misinformation by limiting the influence of questionable data. The crux is transparency and accountability: how openly platforms explain their data curation methods, and whether they allow third-party oversight. Without these safeguards, AI-driven Notes risk entrenching existing power asymmetries and biases rather than democratizing the verification process.

Implications for the Future of Online Discourse

The integration of AI into community moderation and fact-checking embodies both hope and peril. On one hand, automated systems could greatly improve the speed and scope of content verification, potentially curbing misinformation before it spreads widely. This could make social media a more trustworthy arena for discourse, especially if human oversight remains robust and independent. On the other hand, it raises serious concerns about censorship, ideological bias, and the monopolization of truth claims by powerful actors.

Ultimately, the success of AI-enabled Community Notes hinges on a delicate balance. It must combine technological efficiency with unwavering standards of transparency and fairness. Without careful oversight, the technology risks becoming another tool for manipulation rather than enlightenment. While the promise of faster, more accurate fact-checking tools is alluring, the broader implications for free expression and democratic discourse cannot be ignored. As the landscape of social media continues to evolve, stakeholders must critically assess not only the capabilities of AI but also the motives and influences that shape its deployment.
