In a striking move to bolster user privacy, Signal, the messaging platform known for its commitment to security, has recently unveiled a feature called Screen Security. The feature is designed to counteract Microsoft’s controversial Recall feature, which has faced intense scrutiny since its rollout. Recall, intended as an on-device search history tool, regularly captures screenshots of user activity. Although Microsoft means such features to improve the user experience, the privacy and data-security risks they carry have raised alarms across the tech community.

The Privacy Paradox in AI Development

The introduction of the Recall feature raises pressing ethical questions about user privacy and autonomy. Initially presented as an AI tool to help users retrieve past activity, Recall in its original form exemplified a troubling trend in which user consent seemed an afterthought. Even after extensive backlash, Microsoft’s approach continued to lean heavily on AI integration without adequate privacy safeguards. Signal’s response to this potential intrusion reflects a broader push within the tech sector for greater accountability and user control over personal data.

Although Microsoft has since changed Recall to require users to opt in, concerns remain about how developers of third-party applications such as Signal can protect their users from an operating system that appears less focused on privacy than on collecting data. Particularly troubling is Microsoft’s failure to give app developers robust tools for preventing OS-level AI systems from accessing potentially sensitive information, a gap in responsibility the company has yet to close.

A Proactive Move for User Security

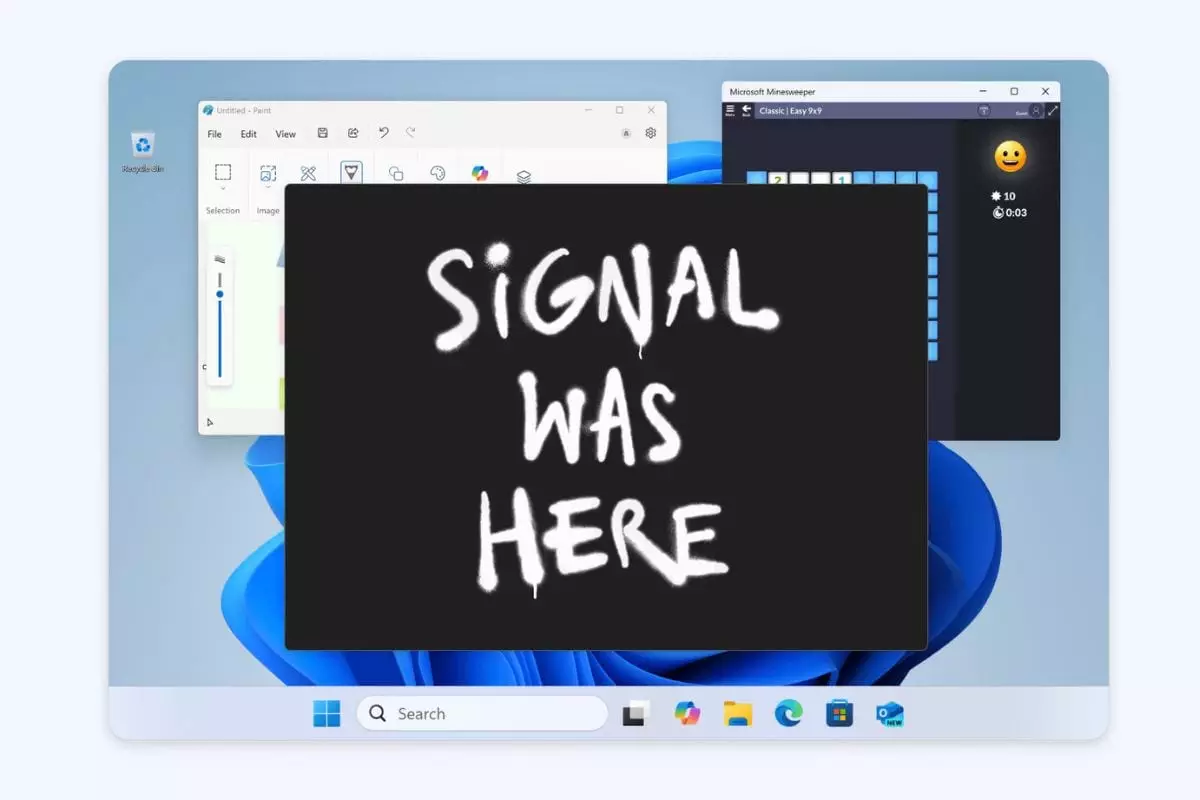

Signal’s implementation of Screen Security, which sets a Digital Rights Management (DRM) flag on its window, is a creative answer to a potential privacy breach. By preventing screenshots of its window on Windows 11 devices, Signal sets a precedent for how applications should respond to system-level threats to user confidentiality. The technique mirrors the one streaming services use to keep their content from being captured, and it marks a notable shift in how communication apps approach security.

Enabling the feature by default for Windows 11 users also shows that Signal is taking a decisive stance, putting privacy first from the outset. The measure does carry usability costs, however: the company acknowledges that Screen Security can interfere with accessibility, particularly for people who rely on assistive technologies such as screen readers. The feature therefore highlights the balance between security and accessibility, an ongoing challenge for the industry.

User Empowerment and Choices

Amid these challenges, Signal stands firm on user autonomy. The app provides a straightforward way to turn Screen Security off, reinforcing the company’s view that users should have the final say over their privacy preferences. When users try to disable the feature, a warning alerts them to the possible risks posed by Microsoft’s systems, so that they make the choice fully informed of its implications.

This transparent approach underscores the need for users and developers alike to engage proactively in conversations about privacy safeguards in new technology. Signal’s stance also opens a larger dialogue about the responsibilities of tech giants and the ethical implications of deploying AI. The vulnerability exposed by Microsoft’s Recall feature is a clarion call for other developers to treat privacy not as a regulatory checkbox but as a core principle of user-centric design.

Reflecting on Industry Standards

As Signal continues to innovate against a backdrop of looming privacy challenges, it urges developers building powerful AI features to consider the broader implications of their work. In its pointed call to action, Signal notes, “People who care about privacy shouldn’t be forced to sacrifice accessibility upon the altar of AI aspirations.” The message resonates across the tech landscape: privacy and accessibility are not ancillary concerns but integral to the design of modern applications.

The journey toward bolstering user privacy isn’t merely Signal’s responsibility; it is a collective endeavor that demands cooperation and accountability from all tech stakeholders. As the digital realm becomes increasingly interconnected, user trust hinges on a comprehensive acknowledgment of privacy rights, careful consideration of AI capabilities, and a commitment to making technology truly user-friendly.