In artificial intelligence and natural language processing, some questions require more than surface-level knowledge. Just as a person might call on a friend with expertise in a particular field, large language models (LLMs) can benefit from collaborating with other models. Researchers at the Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a method for this kind of collaboration, called Co-LLM.

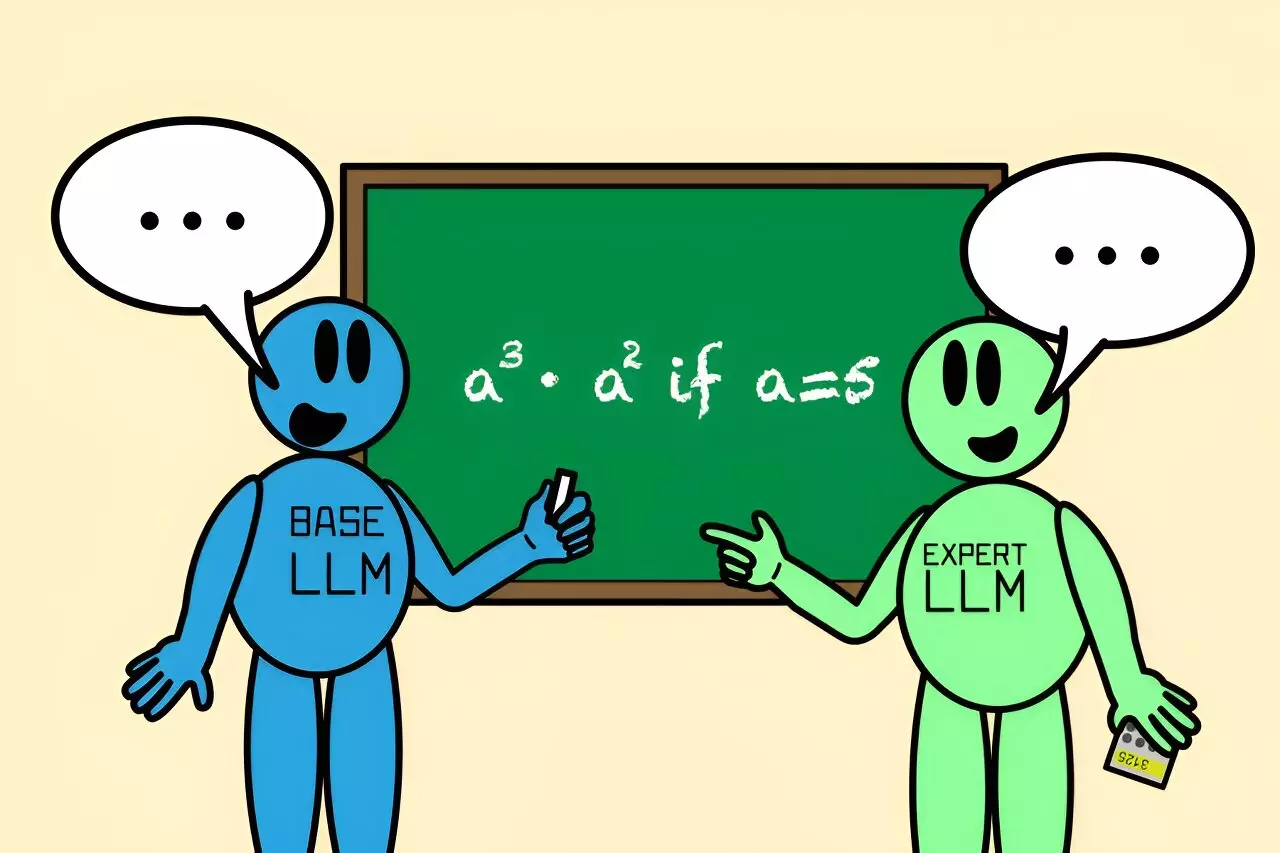

Co-LLM is built on teamwork: it pairs a general-purpose LLM with a specialized expert model. The general-purpose (base) model handles most of a query on its own while identifying the parts that call for specialized knowledge, and it hands those parts to the expert. The combination produces more accurate responses, especially in domains that demand precision, such as medical questions and complex mathematical problems.

At its core, Co-LLM works on a simple principle: the base language model generates a response while judging, token by token, whether it is the right model to produce the next piece. This decision is made by a “switch variable”, which acts like a project manager coordinating the two models in real time. As the general-purpose LLM builds an answer, the switch examines each token and determines whether that token should instead come from the expert model.

Take, for instance, a question about extinct species of bears. The general-purpose model starts writing the answer and continues until the switch activates, at which point the expert model supplies more precise details. Because the expert is consulted only where it is needed, the answer gains accuracy without the cost of running the expert on every token.
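The switch mechanism and the bear example can be sketched as a token-level decoding loop. This is a minimal sketch under stated assumptions, not CSAIL’s implementation: both models are replaced by invented toy lookup tables, the switch is a hand-written function (in Co-LLM it is learned), and the names `co_decode` and `greedy`, the 0.5 threshold, and the token tables are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical interfaces: a "model" maps the current token prefix to a
# next-token distribution; the switch maps the prefix to P(defer to expert).
NextTokenFn = Callable[[List[str]], Dict[str, float]]
SwitchFn = Callable[[List[str]], float]


def greedy(dist: Dict[str, float]) -> str:
    """Return the most probable token (ties broken alphabetically)."""
    return max(sorted(dist), key=lambda tok: dist[tok])


def co_decode(
    prompt: List[str],
    base: NextTokenFn,
    expert: NextTokenFn,
    switch: SwitchFn,
    threshold: float = 0.5,
    max_tokens: int = 20,
    eos: str = "<eos>",
) -> Tuple[List[str], List[str]]:
    """Token-level deferral: for each token, the switch picks which model speaks."""
    tokens = list(prompt)
    sources: List[str] = []
    for _ in range(max_tokens):
        use_expert = switch(tokens) > threshold
        model = expert if use_expert else base
        nxt = greedy(model(tokens))
        if nxt == eos:
            break
        tokens.append(nxt)
        sources.append("expert" if use_expert else "base")
    return tokens[len(prompt):], sources


# Invented toy tables keyed on the last token (purely illustrative).
BASE = {"bear:": {"the": 0.7, "a": 0.3},
        "the": {"brown": 0.6, "cave": 0.4},
        "brown": {"<eos>": 1.0}, "cave": {"<eos>": 1.0}}
EXPERT = {"bear:": {"the": 0.9, "a": 0.1},
          "the": {"cave": 0.95, "brown": 0.05},
          "brown": {"<eos>": 1.0}, "cave": {"<eos>": 1.0}}


def base(prefix: List[str]) -> Dict[str, float]:
    return BASE[prefix[-1]]


def expert(prefix: List[str]) -> Dict[str, float]:
    return EXPERT[prefix[-1]]


def switch(prefix: List[str]) -> float:
    # Hypothetical switch: defer only where a species name is about to appear.
    return 0.9 if prefix[-1] == "the" else 0.1


print(co_decode(["extinct", "bear:"], base, expert, switch))
# (['the', 'cave'], ['base', 'expert'])
```

With the switch disabled (always returning 0.0), the same loop produces `['the', 'brown']`, the base model’s unaided answer, which names a species that is not extinct. Only the one flagged token is routed to the expert.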

The main benefit of this collaboration is efficiency. Unlike approaches that run all of the models at once for every token, Co-LLM calls on the expert model only for the individual tokens the switch flags, which avoids redundant computation and speeds up response generation.
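To make the efficiency argument concrete, here is a back-of-the-envelope cost model. It is a simplification with assumed, made-up per-step costs: it charges the base model (and the switch) on every token and the expert only on deferred tokens, and it ignores any extra work the expert may need to process context tokens it did not generate. `StepCosts`, `deferral_cost`, and `always_both_cost` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StepCosts:
    base: float    # assumed cost of one base-model step (switch included)
    expert: float  # assumed cost of one expert-model step


def deferral_cost(sources: List[str], costs: StepCosts) -> float:
    """Base (and switch) run every step; the expert runs only on deferred tokens."""
    n_expert = sum(1 for s in sources if s == "expert")
    return len(sources) * costs.base + n_expert * costs.expert


def always_both_cost(sources: List[str], costs: StepCosts) -> float:
    """Baseline that queries both models for every token."""
    return len(sources) * (costs.base + costs.expert)


sources = ["base"] * 8 + ["expert"] * 2   # expert handles 2 of 10 tokens
costs = StepCosts(base=1.0, expert=10.0)   # assume the expert is 10x pricier per step
print(deferral_cost(sources, costs), always_both_cost(sources, costs))
# 30.0 110.0
```

Under these assumed numbers, deferring 2 of 10 tokens costs about 27% of querying both models at every step; the saving shrinks as the deferral rate rises.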

To train Co-LLM, the researchers use domain-specific datasets that reveal where the general-purpose model is strong and where it falls short. This targeted data teaches the base model about the expert model’s areas of expertise, guiding it to hand off the parts of an answer where collaboration yields better results.
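One way to formalize this training signal, offered here as an assumption rather than the researchers’ exact recipe, is to treat the choice of model at each token as a latent variable and fit the switch by maximizing the marginal likelihood of domain text under a two-model mixture: p(x_t) = (1 − π_t)·p_base(x_t) + π_t·p_expert(x_t), where π_t is the switch’s probability of deferring. The sketch below uses a logistic switch over hypothetical per-token features and invented per-token probabilities; `train_switch`, `gate`, and `mixture_nll` are illustrative names, and a real system would compute these quantities from the models themselves.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def gate(w: Sequence[float], b: float, h: Sequence[float]) -> float:
    """P(defer to expert) from a logistic switch over (hypothetical) features h."""
    return sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)


def mixture_nll(w, b, feats, p_base, p_expert) -> float:
    """Negative log marginal likelihood: each token comes from either model."""
    total = 0.0
    for h, pb, pe in zip(feats, p_base, p_expert):
        pi = gate(w, b, h)
        total -= math.log((1 - pi) * pb + pi * pe)
    return total


def train_switch(feats, p_base, p_expert, lr=0.5, epochs=200) -> Tuple[List[float], float]:
    """Full-batch gradient descent on mixture_nll w.r.t. the switch weights only."""
    w, b = [0.0] * len(feats[0]), 0.0
    n = len(feats)
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for h, pb, pe in zip(feats, p_base, p_expert):
            pi = gate(w, b, h)
            mix = (1 - pi) * pb + pi * pe
            # d(-log mix)/dz = -(pe - pb) * pi * (1 - pi) / mix
            g = -(pe - pb) * pi * (1 - pi) / mix
            gw = [gi + g * hi for gi, hi in zip(gw, h)]
            gb += g
        w = [wi - lr * gi / n for wi, gi in zip(w, gw)]
        b -= lr * gb / n
    return w, b


# Invented toy data: feature [1, 0] marks domain-heavy tokens where the expert
# assigns the observed token higher probability; [0, 1] marks generic tokens.
feats = [[1.0, 0.0], [0.0, 1.0]]
p_base = [0.1, 0.8]
p_expert = [0.9, 0.4]

w, b = train_switch(feats, p_base, p_expert)
print(round(gate(w, b, [1.0, 0.0]), 3), round(gate(w, b, [0.0, 1.0]), 3))
```

No token is labeled with which model “should” produce it; the switch learns to defer purely from which model explains the observed token better. After training, the gate leans toward the expert on the domain-marked feature and toward the base model on the generic one.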

For example, using biomedical datasets, they trained the general-purpose model to recognize when to defer to Meditron, a model specialized for medical data. This allowed Co-LLM to answer complex questions, ranging from drug compositions to disease mechanisms, more accurately than a single model working alone. Users receive confident answers along with indications of where further verification may be needed.

To demonstrate Co-LLM’s capabilities, the researchers tested it on a range of math and health-related questions. In one case involving a simple math problem, the general-purpose model on its own arrived at an incorrect answer. When it collaborated with Llemma, a math-specialized LLM, the combined system found the correct solution.

These results suggest that Co-LLM can be applied across disciplines. Potential applications range from assisting medical professionals with data analysis to refining enterprise documents where accuracy is paramount. Co-LLM could also be used to train small models that collaborate with more powerful ones, keeping outputs accurate even when resources are constrained.

As the research continues, the team plans to extend Co-LLM with mechanisms for self-correction and improved feedback. The goal is a system that not only calls on the expert effectively but can also backtrack to earlier steps of an answer when needed, preserving the integrity of the final response.

Looking ahead, incorporating up-to-date data would keep the models current with the latest findings and allow continuous improvement. This could be especially valuable in industries that must make rapid decisions as information changes.

Co-LLM offers a glimpse of where AI and language processing may be heading. By mimicking how people collaborate on decisions, the approach improves efficiency and changes how we think about models working together to solve hard problems. As this paradigm is explored further, AI-driven collaboration could reshape many sectors, improving both knowledge sharing and response accuracy.
