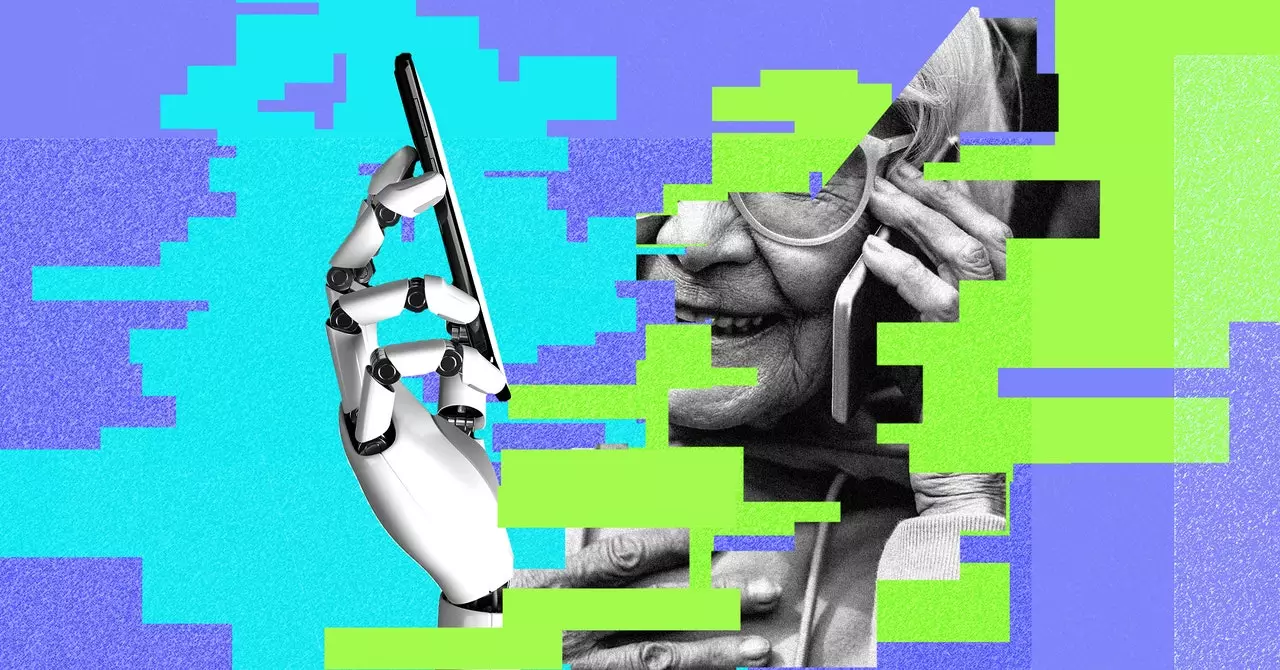

Scammers are becoming more sophisticated in their methods of deception. One of the latest trends is AI voice cloning, in which artificial intelligence generates convincing audio of a real person’s voice. Scammers use it to mimic a loved one or a trusted individual and manipulate victims into sending money or sharing personal information.

Rise of AI Voice Cloning

AI voice cloning is on the rise, as advances in the technology make it easier for scammers to create realistic audio recordings. These AI tools are trained on existing audio clips of human speech and can imitate almost anyone’s voice. Companies like OpenAI have developed text-to-speech models that further advance voice cloning capabilities, and as tools of this kind spread, the technique becomes more accessible to fraudsters.

Scammers use AI voice cloning to stage fake emergencies, such as a loved one who has been in a car accident or urgently needs money. They call victims and use the cloned voice to pressure them into acting immediately. In some cases, scammers also spoof legitimate phone numbers to make the calls more convincing.

Protecting Yourself

Staying vigilant is the best defense against AI voice cloning scams. Here are some expert tips to help you stay safe:

If you receive an unexpected call asking for money or personal information, take a moment to verify the caller’s identity. Hang up and call back on a number you already know belongs to that person, or reach them through a different channel, such as email or video chat. Agreeing on a safe word with your loved ones in advance also gives you a way to confirm their identity in an emergency.

To confirm that a distressing call is authentic, ask personal questions that only your real loved one could answer. For example, ask about a shared memory or a specific detail that a scammer would have no way of knowing. This can help you tell a real call from an AI voice cloning scam.

Be Wary of Emotional Manipulation

Experienced scammers are adept at manipulating emotions and creating a sense of urgency to pressure victims into acting quickly. Be cautious of any communication that provokes strong emotions, and take a moment to reflect before you act. By staying calm and rational, you can avoid falling prey to scammers’ tactics.

AI voice cloning scams are a growing threat, but by following these tips and staying alert, you can protect yourself. Take the time to verify caller identities, ask personal questions, and guard against emotional manipulation to safeguard your money and personal information. Stay informed and stay safe as fraudsters’ tools continue to evolve.
