In the rapidly evolving landscape of artificial intelligence, a new generation of embedding models is changing how machines represent meaning. Google's Gemini Embedding model, now generally available and at the top of public benchmarks, marks a pivotal moment. This model isn't just another tool; it is a step toward more nuanced, efficient, and versatile AI applications. As an advocate for innovation, I see Gemini as a testament to Google's strategic vision and to its ambition to lead the quest for machines that grasp the subtleties of human language and beyond.

While the initial excitement around Gemini's top ranking on the Massive Text Embedding Benchmark (MTEB) is justified, it's vital to scrutinize the broader, highly competitive arena it enters. That arena is crowded with formidable open-source contenders and specialized models, each vying for dominance. What will ultimately define Gemini isn't just its performance but its impact on enterprise AI deployment. Will it redefine industry standards, or be superseded by more tailored, nimble open-source solutions? The answer lies in understanding the model's capabilities, its restrictions, and the strategic implications of adopting it.

Transforming Data into Insight: The Core Power of Embeddings

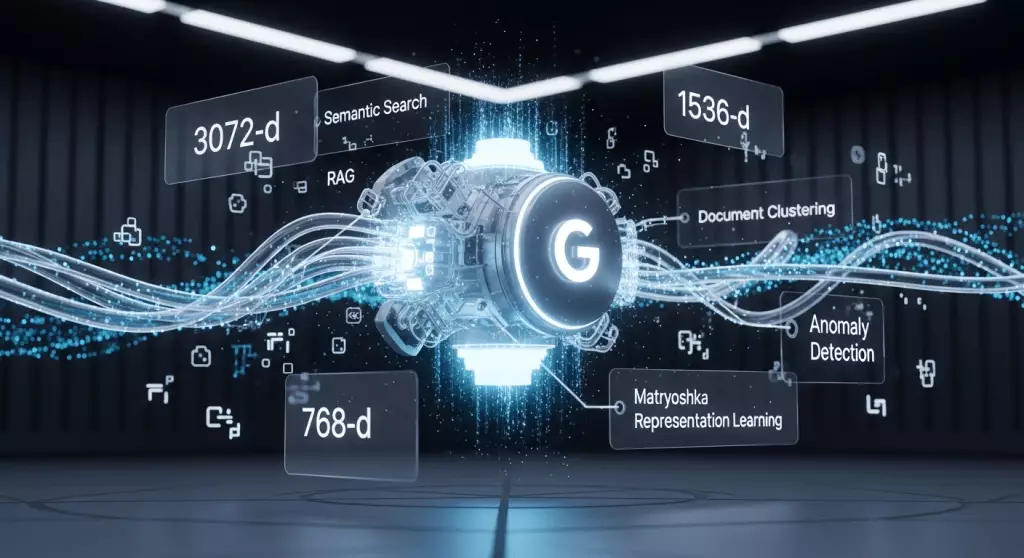

At their essence, embedding models convert complex data, be it text, images, or other media, into vectors: fixed-length lists of numbers in which semantically similar inputs land close together. This geometry lets AI systems measure subtle semantic differences and similarities directly, typically with cosine similarity. In practice, this means smarter search, context-aware retrieval, and multimodal integration that go well beyond simple keyword matching. Instead of hunting for exact strings, AI systems can capture intent, nuance, and contextual relevance, which matters in an era where user experience and accuracy are paramount.
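To make the idea concrete, here is a minimal Python sketch of embedding-based search, assuming the vectors have already been produced by some model. The four-dimensional vectors are invented for illustration (real Gemini embeddings have thousands of dimensions), and `cosine_similarity` and `rank_documents` are hypothetical helpers, not part of any SDK. The query "How do I get my money back?" shares no keywords with the refund document, yet it ranks first because its vector points in a similar direction.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec: list[float], doc_vecs: dict[str, list[float]]) -> list[str]:
    """Return document ids sorted from most to least similar to the query."""
    scores = {doc_id: cosine_similarity(query_vec, vec) for doc_id, vec in doc_vecs.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical 4-dimensional vectors standing in for real model output.
docs = {
    "refund_policy": [0.9, 0.1, 0.0, 0.2],
    "shipping_times": [0.1, 0.8, 0.3, 0.0],
    "careers_page": [0.0, 0.1, 0.9, 0.4],
}
query = [0.8, 0.2, 0.1, 0.1]  # stand-in for "How do I get my money back?"

print(rank_documents(query, docs))  # ['refund_policy', 'shipping_times', 'careers_page']
```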

From a practical standpoint, industries like finance, legal, and healthcare stand to reap immense benefits. Embeddings enable organizations to classify documents, conduct sentiment analysis, or detect anomalies with greater efficiency. Moreover, advanced embedding models like Gemini let developers size their representations to match resource availability and performance needs: Gemini is trained with Matryoshka Representation Learning, so its default 3,072-dimensional vectors can be truncated to smaller sizes such as 1,536 or 768 while preserving their most relevant features. Such versatility marks a significant evolution from earlier fixed-size models, allowing enterprises to cut cost and latency without sacrificing much accuracy.
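The dimension trade-off can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Google's implementation: it assumes a Matryoshka-trained vector whose leading values carry the most information, keeps a prefix, and rescales it to unit length so cosine and dot-product scores stay comparable. The helper names and the made-up input vector are hypothetical. At float32 precision, one million 3,072-dimensional vectors take about 12.3 GB of raw storage, versus about 3.1 GB at 768 dimensions.

```python
import math


def truncate_embedding(vec: list[float], dims: int) -> list[float]:
    """Keep the first `dims` values and rescale them to unit length.

    Assumes Matryoshka-style training, which concentrates the most useful
    information in the leading dimensions. Re-normalizing keeps cosine and
    dot-product scores comparable across vectors.
    """
    if not 0 < dims <= len(vec):
        raise ValueError("dims must be between 1 and len(vec)")
    prefix = vec[:dims]
    norm = math.sqrt(sum(x * x for x in prefix))
    if norm == 0.0:
        return prefix  # all-zero prefix: nothing to rescale
    return [x / norm for x in prefix]


def storage_bytes(num_vectors: int, dims: int, bytes_per_value: int = 4) -> int:
    """Raw index size, assuming float32 storage (4 bytes per value)."""
    return num_vectors * dims * bytes_per_value


# Made-up stand-in for a full 3,072-dimensional vector from the model.
full = [0.5 - i / 6144 for i in range(3072)]
small = truncate_embedding(full, 768)

print(len(small))  # 768
print(storage_bytes(1_000_000, 3072) / storage_bytes(1_000_000, 768))  # 4.0
```

In practice every stored vector and every query vector would be truncated to the same size; mixing sizes within one index breaks the comparison.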

Google’s Strategy: A Balancing Act of Power and Accessibility

Google's decision to offer Gemini Embedding through its Vertex AI platform and as a core API service signals a clear strategic move. It democratizes access to high-performance AI, enabling even smaller players to leverage powerful semantic understanding without extensive customization. At $0.15 per million input tokens, it is positioned as an accessible yet advanced solution, an impressive feat given its benchmark performance and its support for more than 100 languages.
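A quick back-of-the-envelope calculation shows what that price means in practice. The sketch below assumes the $0.15-per-million-token figure quoted above and a hypothetical corpus of two million documents averaging 500 tokens; it ignores query-time embedding calls and any future re-indexing, both of which add to the bill.

```python
# Price quoted in the article; assumed here, and subject to change by Google.
PRICE_PER_MILLION_TOKENS_USD = 0.15


def embedding_cost_usd(
    num_docs: int,
    avg_tokens_per_doc: int,
    price_per_million: float = PRICE_PER_MILLION_TOKENS_USD,
) -> float:
    """Estimate the one-off cost of embedding a corpus, rounded to cents."""
    total_tokens = num_docs * avg_tokens_per_doc
    return round(total_tokens / 1_000_000 * price_per_million, 2)


# Hypothetical corpus: 2 million documents averaging 500 tokens (1 billion tokens).
print(embedding_cost_usd(2_000_000, 500))  # 150.0
```

At that rate, embedding a billion tokens of documents once costs about $150.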

However, this closed, API-only approach raises critical questions about control and data sovereignty. Enterprises with strict data security requirements, such as those in finance or healthcare, face real limits when they must rely solely on cloud-based APIs. This is where open-source alternatives such as Alibaba's Qwen3-Embedding or Qodo-Embed-1 shine: they can run locally, keep data in-house, and be customized to specific needs.

What's more, the rising popularity of models specialized for particular domains, such as Mistral's code-retrieval embeddings or Cohere's Embed 4 for noisy data, exposes a fundamental tension. Gemini leads general-purpose benchmarks for now, but niche models often outperform it on their target tasks, challenging the notion of a one-size-fits-all solution. For enterprises, the decision becomes a strategic calculus: opt for a top-tier generalist model with broad support, or channel resources into domain-specific, adaptable tools, many of them open source.
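Because leaderboard rank does not guarantee performance in a specific domain, one way to settle the generalist-versus-specialist question is to score candidate models on your own labeled queries. The following is a minimal sketch of recall@k, a standard retrieval metric; the query IDs, document IDs, and rankings are hypothetical placeholders for results you would collect by running each model over your own corpus.

```python
def recall_at_k(ranked_ids: list[str], relevant_ids: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant_ids:
        return 0.0
    hits = sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant_ids)
    return hits / len(relevant_ids)


def mean_recall_at_k(results: dict[str, list[str]], qrels: dict[str, set[str]], k: int) -> float:
    """Average recall@k over every query that has relevance judgments."""
    scores = [recall_at_k(results.get(q, []), rel, k) for q, rel in qrels.items()]
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical relevance judgments for two in-house queries.
qrels = {"q1": {"a", "b"}, "q2": {"c"}}

# Hypothetical rankings returned by two candidate embedding models.
generalist = {"q1": ["a", "x", "y"], "q2": ["z", "c", "w"]}
specialist = {"q1": ["b", "a", "x"], "q2": ["c", "y", "z"]}

for name, results in [("generalist", generalist), ("specialist", specialist)]:
    print(name, mean_recall_at_k(results, qrels, k=2))
# generalist 0.75
# specialist 1.0
```

A real evaluation would use many more queries, but the structure stays the same: identical judgments, one ranking per candidate model, one score per model.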

The Open-Source Challenge: Disrupting Proprietary Dominance

The technological landscape is witnessing a democratization wave led by open-source embedding models. Alibaba's Qwen3-Embedding, licensed under Apache 2.0, exemplifies this shift, ranking close behind Gemini on MTEB. Its permissive license makes it an attractive alternative for businesses seeking flexibility and transparency, particularly where control over data and infrastructure is non-negotiable.

In parallel, domain-specific open models like Qodo-Embed-1 aim to outperform larger, general-purpose counterparts within specialized niches such as code retrieval. The appeal here isn’t just about cost savings or licensing freedom; it’s about tailored optimization. Companies that are invested in building bespoke AI pipelines may find these open models provide the necessary granularity, control, and privacy assurances that proprietary APIs simply cannot.

Furthermore, with organizations increasingly prioritizing on-premise solutions due to regulatory constraints, open-source models are not just competitors but essential components of a resilient, adaptable AI infrastructure. This trend significantly alters the traditional proprietary paradigm by empowering organizations to assemble their own AI stacks, leveraging leading models without being beholden to cloud provider restrictions.

Strategic Implications and the Road Ahead

The emergence of Google’s Gemini Embedding as a benchmark leader doesn’t just elevate AI performance; it prompts a fundamental reevaluation of enterprise strategies. Organizations must weigh the benefits of seamless integration and ease of use against the potential risks of vendor lock-in, data sovereignty issues, and limited customization. For some, adopting Gemini signifies immediate access to top-tier technology, but it may also mean surrendering control and flexibility.

Conversely, the open-source community offers a compelling alternative: control, cost-efficiency, and the capacity to innovate rapidly. As these models mature and benchmarks continue to evolve, competition for the best embedding solution will intensify. Enterprises that approach the choice strategically will likely adopt hybrid architectures, leveraging proprietary power where appropriate while keeping open-source agility for sensitive or specialized use cases.
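A hybrid setup can be sketched as a simple routing layer. In this illustration, `local_embed` and `hosted_embed` are hypothetical stand-ins (they return toy vectors, not real embeddings) for a self-hosted open model and a hosted API, and routing on a `sensitive` flag is an assumption. One design constraint is real, however: vectors produced by different models live in different spaces and cannot be compared, so each model gets its own index, and queries against that index must be embedded with the same model.

```python
from dataclasses import dataclass, field
from typing import Callable

Embedder = Callable[[str], list[float]]


def local_embed(text: str) -> list[float]:
    """Hypothetical stand-in for a self-hosted open model such as Qwen3-Embedding."""
    return [float(len(text)), 1.0]


def hosted_embed(text: str) -> list[float]:
    """Hypothetical stand-in for a hosted API such as Gemini Embedding."""
    return [1.0, float(len(text))]


@dataclass
class Index:
    embed: Embedder
    vectors: dict[str, list[float]] = field(default_factory=dict)

    def add(self, doc_id: str, text: str) -> None:
        self.vectors[doc_id] = self.embed(text)


# One index per model: vectors from different models are not comparable,
# so they never share an index, and queries must use the matching model.
indexes = {"on_prem": Index(local_embed), "cloud": Index(hosted_embed)}


def ingest(doc_id: str, text: str, sensitive: bool) -> str:
    """Route sensitive documents to the self-hosted model, the rest to the API."""
    target = "on_prem" if sensitive else "cloud"
    indexes[target].add(doc_id, text)
    return target


print(ingest("patient-001", "MRI notes", sensitive=True))  # on_prem
print(ingest("faq-17", "Store opening hours", sensitive=False))  # cloud
```

Keeping the embedder behind a common callable type also limits lock-in: swapping the hosted model for another provider means re-embedding one index, not rewriting the pipeline.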

What becomes clear is that the future of embeddings, and consequently AI’s ability to truly understand and reason about data, hinges on a complex interplay of performance, control, and strategic flexibility. Google’s Gemini is undoubtedly a significant milestone, but it’s part of a broader movement towards democratized, customizable AI solutions. Navigating this landscape successfully will require enterprises to critically assess their own priorities, technical capabilities, and long-term visions—because in the realm of intelligent data representation, power must be balanced with strategic agility.