Recent advancements in artificial intelligence have underscored the capabilities of large language models (LLMs) such as GPT-4, which are reshaping our interactions with technology. These models excel at a wide array of tasks, including text generation, coding, and language translation, thanks to one fundamental mechanism: predicting what comes next in a sequence based on the preceding inputs. However, a recent study challenges our understanding of this predictive capability by highlighting an “Arrow of Time” phenomenon: LLMs are consistently better at predicting the words that come next than at working backward to determine the words that came before. This discovery opens new avenues for understanding the dynamics of language processing and the way AI models learn it.

Understanding Predictive Asymmetry in Language Models

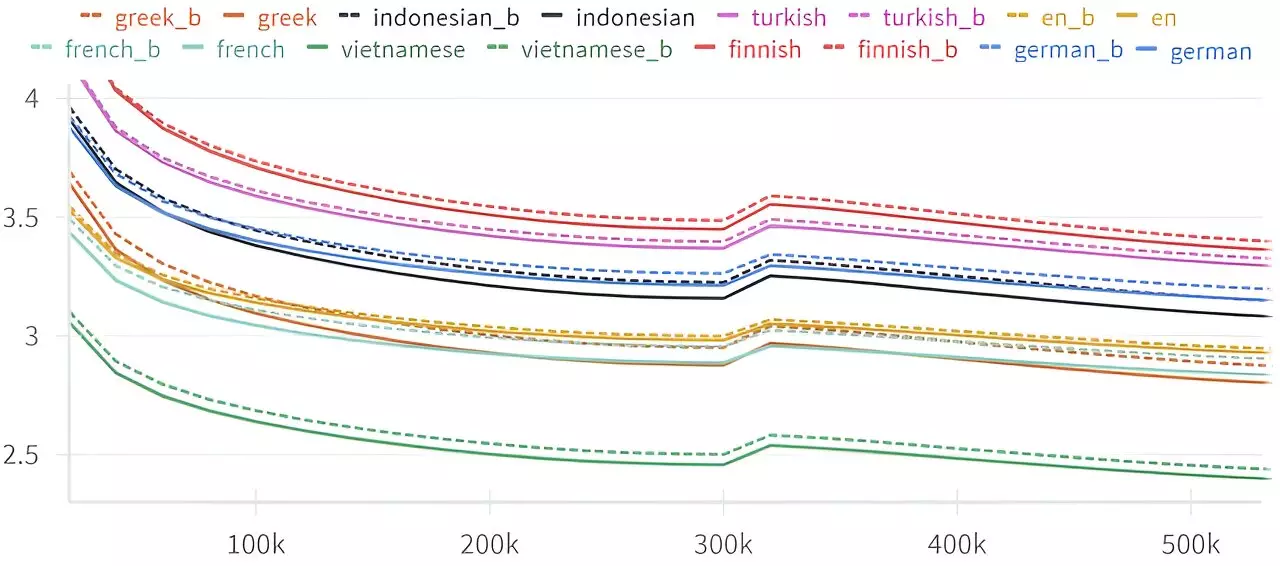

At the core of the researchers’ inquiry is the question of how language models handle the temporal structure of language. In principle, LLMs should exhibit symmetrical predictive power: predicting the next word from what precedes it and predicting the previous word from what follows it should be equally difficult. Yet the findings of a collaborative study by Professor Clément Hongler, Jérémy Wenger, and Vassilis Papadopoulos demonstrate a consistent disparity: LLMs are biased towards forward prediction. This “Arrow of Time” effect means that models predict upcoming words more accurately than they infer preceding ones. The performance gap has been observed across various architectures, including Generative Pre-trained Transformers (GPT), Gated Recurrent Units (GRU), and Long Short-Term Memory (LSTM) networks, which indicates a fundamental asymmetry in how these models function.
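To make the forward-versus-backward comparison concrete, here is a minimal sketch of how such a gap could be measured. It is not the study's setup: the add-alpha bigram model, the `bigram_loss` helper, and the toy sentence are all assumptions chosen for illustration, and "backward" is implemented simply by fitting the same model on the reversed token sequence. The numbers it prints say nothing about real LLMs; only the procedure is the point.

```python
import math
from collections import Counter


def bigram_loss(tokens, alpha=1.0):
    """Average negative log-likelihood (nats per prediction) of each token
    given the token before it, using an add-alpha smoothed bigram model
    fit on the same sequence. Hypothetical helper, for illustration only."""
    vocab_size = len(set(tokens))
    pair_counts = Counter(zip(tokens, tokens[1:]))
    context_counts = Counter(tokens[:-1])
    total = 0.0
    for prev, nxt in zip(tokens, tokens[1:]):
        p = (pair_counts[(prev, nxt)] + alpha) / (
            context_counts[prev] + alpha * vocab_size
        )
        total -= math.log(p)
    return total / (len(tokens) - 1)


# Toy corpus (an assumption; far too small to show any real effect).
tokens = "the cat sat on the mat and the dog sat on the rug".split()

# Forward: predict each word from the one before it.
forward = bigram_loss(tokens)
# Backward: reverse the sequence, so "next" now means "previous".
backward = bigram_loss(tokens[::-1])

print(f"forward loss:  {forward:.3f} nats")
print(f"backward loss: {backward:.3f} nats")
```

The same comparison scales up in the obvious way: train one model on the text as written and another on the reversed text, then compare their average loss on held-out data.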

The research also draws on the foundational work of Claude Shannon, the pioneer of information theory. In his 1951 exploration, Shannon posited that predicting the next unit in a sequence and predicting the previous one should, in theory, be equally difficult. Despite this, LLMs are sensitive to temporal direction. The implication is profound: the structure of language processing may reflect deeper properties of cognition that extend beyond mere computational methodology.
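One standard way to see why the two directions should be equally hard in theory is the chain rule of probability. The notation here is assumed for illustration and is not taken from the study: $x_1, \dots, x_n$ is a sequence of tokens, $P$ is their joint distribution, and $H$ denotes entropy.

```latex
P(x_1,\dots,x_n)
  = \prod_{i=1}^{n} P(x_i \mid x_1,\dots,x_{i-1})
  = \prod_{i=1}^{n} P(x_i \mid x_{i+1},\dots,x_n)
\quad\Longrightarrow\quad
H(X_1,\dots,X_n)
  = \sum_{i=1}^{n} H(X_i \mid X_1,\dots,X_{i-1})
  = \sum_{i=1}^{n} H(X_i \mid X_{i+1},\dots,X_n)
```

Both factorizations describe the same joint distribution, so a predictor that knew $P$ exactly would incur the same total loss in either direction. Any gap measured in practice therefore reflects how models learn, not the information content of the text.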

Hongler notes, “While theoretically, there should be no difference, LLMs seem to inherently process language with a pronounced temporal awareness.” This indicates that linguistic structures may be designed with a directional flow, impacting not only technology but also our understanding of time and causality in both linguistic and broader cognitive contexts.

The Role of Intelligent Agency and Detection of Life

An especially fascinating aspect of this research links the temporal processing tendencies of LLMs to the identification of intelligent agents. The study poses an intriguing hypothesis: if asymmetries in language reveal something about intelligent processing, they could serve as indicators of intelligence, or even life, in other contexts. This presents exciting prospects for future technologies that might use this temporal sensitivity to design more capable LLMs that not only simulate conversation but also understand context and causality in greater depth.

The study originated in a creative collaboration with a theater school, where the researchers sought to enhance storytelling through AI. Training a chatbot to generate narratives that arrive at a known ending, such as “they lived happily ever after,” gave early insight into the temporal asymmetry of LLMs. This initial work exposed the challenges of constructing a narrative in reverse and also pointed to the potential for engaging, responsive AI applications. The unexpected observation that LLMs predict less accurately backward than forward prompted reflection on deeper questions about language, knowledge acquisition, and even the fabric of reality itself.

Conclusion: Pathways for Future Exploration

The Arrow of Time effect identified by this research marks a notable step in our understanding of both language processing in AI and broader philosophical questions about time. As LLMs continue to evolve, taking this predictive asymmetry into account may lead to technologies better able to model the complexities of human thought. The finding has implications for linguistics and AI applications, and it could also inform philosophical inquiry into the nature of intelligence and the unfolding of time itself. Future work could bridge gaps in existing knowledge and uncover deeper connections within the narrative structure of human language.
