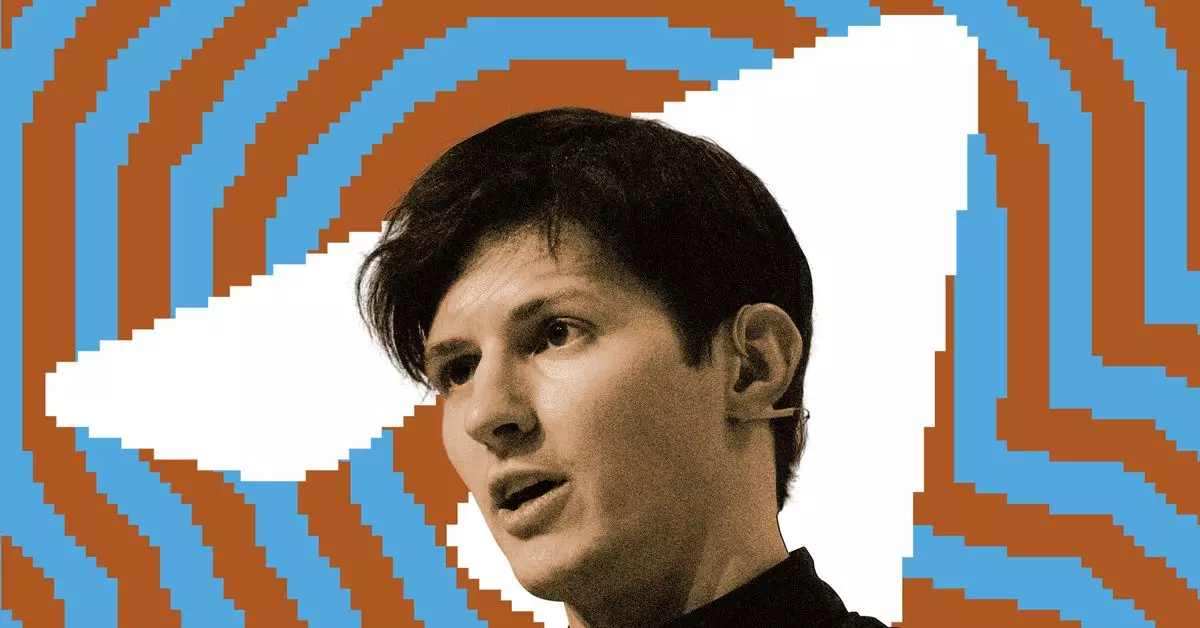

Telegram has changed its moderation policy for private chats. The company recently removed language from its FAQ page stating that private chats were protected from moderation requests. The change follows CEO Pavel Durov’s arrest in France for allegedly allowing criminal activity to flourish on the platform.

Despite initially proclaiming that he had “nothing to hide,” Durov has since changed his stance. In a public statement following his arrest, he acknowledged the platform’s shortcomings, attributing them to the rapid increase in user count. Durov expressed a commitment to improving moderation efforts and ensuring a safer online environment. This change in tone reflects a shift towards greater accountability on the part of Telegram.

Policy Adjustments in Action

The clearest sign of Telegram’s revised approach is its FAQ page. Previously, the page stated that the platform did not process any requests related to private chats. The updated version instead explains how users can report illegal content through the app’s “Report” buttons. The change signals a more proactive stance on moderating harmful content on the platform.

Durov’s arrest in France was prompted by allegations that Telegram was being used for criminal activity, including the distribution of child sexual abuse material and drug trafficking. French authorities have criticized the company for its lack of cooperation in investigations. Although Telegram serves as a vital source of information on events like Russia’s war in Ukraine, its lax approach to content moderation has drawn scrutiny and legal repercussions.

The Path Forward

As Telegram grapples with these challenges, it is imperative for the company to prioritize user safety and ethical practices. Durov’s acknowledgment of the platform’s vulnerabilities and his commitment to improving moderation processes are positive steps in the right direction. By actively engaging with stakeholders and implementing robust moderation tools, Telegram can enhance trust and ensure a more secure platform for its users.

Telegram’s recent policy changes and the internal reevaluation of moderation practices signal a pivotal moment for the platform. By addressing the shortcomings in its current approach and striving towards greater transparency and accountability, Telegram can uphold its commitment to fostering a safe online environment for users worldwide.
