Imagine standing in a grocery store, picking out the freshest apples and wondering whether an app could help you decide. That scenario is becoming more feasible as machine learning and artificial intelligence mature. A recent study from the Arkansas Agricultural Experiment Station, led by Dongyi Wang, examines how technology can improve the way we assess food quality. Although machine-learning models currently struggle to match human adaptability in judging food quality, the study suggests a path forward that could eventually help consumers and retailers alike.

The Challenges of Machine Learning in Food Quality Assessment

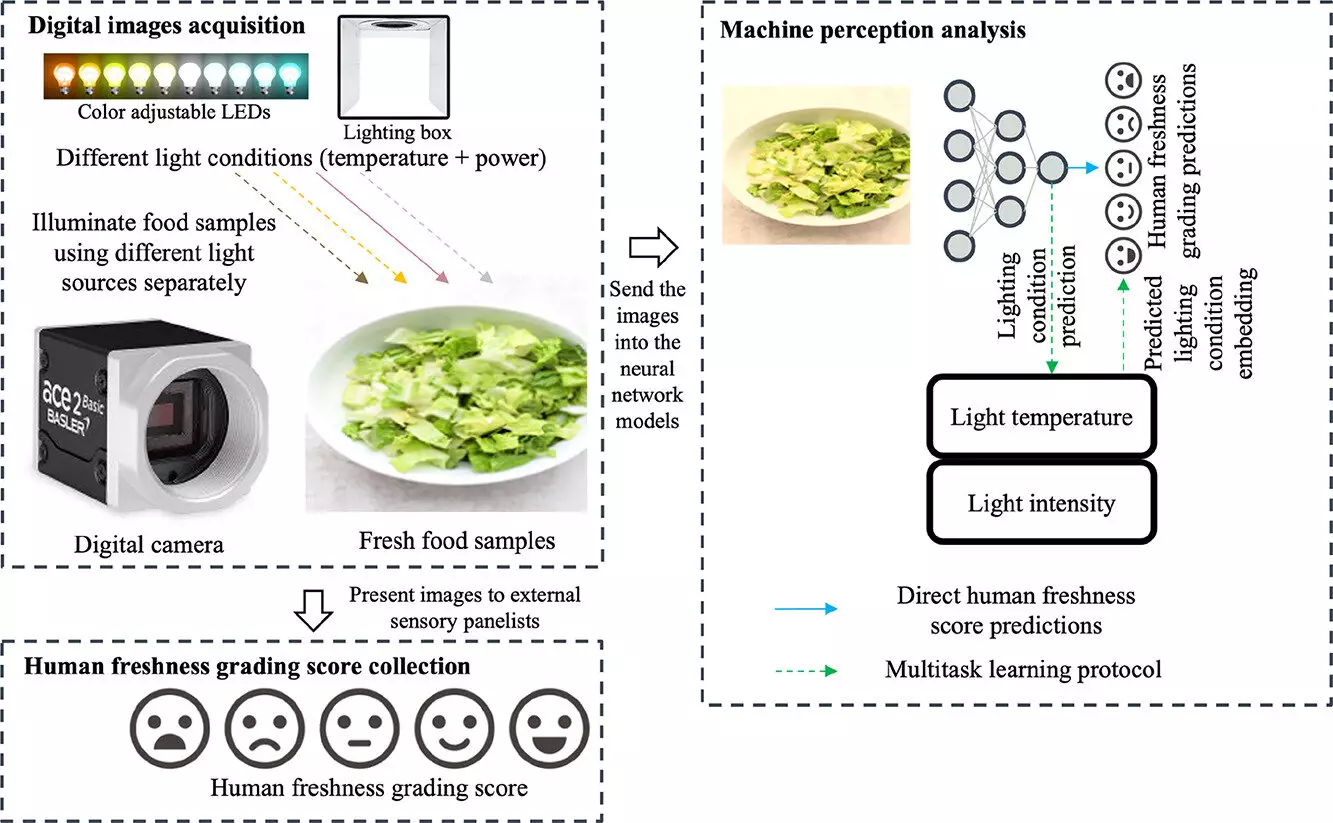

Machine learning has been hailed as a revolutionary tool across industries, yet its application to food quality assessment faces hurdles. Current algorithms often fail to account for variability in human perception driven by environmental factors, particularly lighting. Wang’s research indicates that models relying solely on human-labeled data or basic color metrics are inadequate: they do not account for the ways lighting affects human judgment of food appearance and freshness. The study highlights this gap in how machine learning systems are traditionally trained, a gap that Wang and his team are working to fill.

The research involved an extensive sensory evaluation of Romaine lettuce. The 109 participants spanned a wide age range, and each was screened to confirm they had no color blindness or other vision impairments. Over five days, participants examined 75 photographs of lettuce each day, rating the freshness of each on a scale from zero to 100. The work drew on a dataset of 675 images captured under varying lighting conditions, from cool blue tones to warm orange hues. By incorporating these lighting scenarios, the study sought to mimic real-world conditions more closely, so that the resulting machine learning models could reflect human perceptions of quality under different circumstances.
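To make the shape of such a dataset concrete, here is a minimal Python sketch of how panel ratings might be averaged per lettuce sample and lighting condition. The record layout, the names (`mean_score_by_condition`, `lettuce_A`, `cool`, `warm`), and every number are invented for illustration; they are not the study's data or its pipeline, and the direction of the lighting effect shown here is arbitrary.

```python
from collections import defaultdict
from statistics import mean


def mean_score_by_condition(ratings):
    """Average the 0-100 freshness scores for each (sample, lighting) pair."""
    grouped = defaultdict(list)
    for _participant, sample, lighting, score in ratings:
        if not 0 <= score <= 100:
            raise ValueError(f"score out of range: {score}")
        grouped[(sample, lighting)].append(score)
    return {key: mean(scores) for key, scores in grouped.items()}


# Hypothetical records: (participant, sample, lighting, freshness score).
ratings = [
    ("p01", "lettuce_A", "cool", 70),
    ("p02", "lettuce_A", "cool", 74),
    ("p01", "lettuce_A", "warm", 60),
    ("p02", "lettuce_A", "warm", 64),
]

means = mean_score_by_condition(ratings)

# Difference in perceived freshness for the same sample under two lights.
lighting_shift = means[("lettuce_A", "warm")] - means[("lettuce_A", "cool")]
print(means, lighting_shift)
```

A gap between the two averages for the same head of lettuce is the kind of lighting-driven variation in human judgment that the article describes.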

Refining Machine Learning Models Through Human Insight

The standout finding is that integrating human perceptual data into the training of machine learning models decreased prediction errors in food quality assessments by 20%. The improvement illustrates the value of combining human intuition with computational precision. Machine learning that ignores human perception remains limited in its effectiveness, which points to a broader principle for tech development: tools should account for the intricacies of human behavior and sensory evaluation.
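As a rough illustration of what a 20% drop in prediction error means, the sketch below computes a relative error reduction in Python. The article does not say which error metric the researchers used, so mean absolute error is an assumption here, and the function names and scores are made up for the example.

```python
def mean_absolute_error(predicted, human):
    """Average absolute gap between model scores and human scores."""
    if len(predicted) != len(human) or not predicted:
        raise ValueError("need two equal-length, non-empty score lists")
    return sum(abs(p - h) for p, h in zip(predicted, human)) / len(predicted)


def relative_error_reduction(baseline_error, improved_error):
    """Fraction by which the error fell relative to the baseline."""
    if baseline_error <= 0:
        raise ValueError("baseline error must be positive")
    return (baseline_error - improved_error) / baseline_error


# Hypothetical freshness scores on the 0-100 scale (not from the study).
human_scores = [80, 60, 40, 20]
baseline_predictions = [70, 70, 50, 10]  # model without perception data
improved_predictions = [72, 68, 48, 12]  # model with perception data

baseline_mae = mean_absolute_error(baseline_predictions, human_scores)
improved_mae = mean_absolute_error(improved_predictions, human_scores)
reduction = relative_error_reduction(baseline_mae, improved_mae)
print(f"{baseline_mae} -> {improved_mae}: {reduction:.0%} lower error")
```

In this toy case the average miss falls from 10 points to 8 points on the 100-point scale, which is a 20% relative reduction.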

While the study focused on Romaine lettuce, the methods developed could have far-reaching applications in various industries. Wang suggests that the principles of leveraging human perception analytics could easily extend to the evaluation of other perishable goods and even non-food items such as jewelry. By understanding how our perceptions can be manipulated and measured, industries could develop tools that enhance product presentation, ensuring that consumers are drawn to the quality and freshness they seek.

The potential to integrate machine learning with sensory evaluations raises intriguing questions about consumer experiences in retail environments. Imagine a future where your grocery store app not only identifies the freshest fruits but also suggests the lighting conditions under which to view them for the best visual appeal. The study’s findings could change how grocery stores present their products and open up avenues for personalized shopping experiences.

As we reflect on the findings from this Arkansas-based study, it becomes evident that the synergy between human perception and machine learning is not merely a futuristic dream but an attainable reality. By enhancing our understanding of how environmental factors like lighting can bias human assessments, researchers like Wang are paving the way for an era where technology complements human instincts rather than replacing them. As machine learning continues to evolve in the field of food quality assessment, the implications could transform both consumer experiences and industry standards, ensuring that we enjoy not only the taste of quality but the sight of it as well.
