Artificial intelligence has made remarkable strides in content creation, yet it often falls short when it comes to genuine safety protocols. Grok Imagine, a recent player in the generative AI video landscape, exemplifies this disconnect. Although the platform claims to have safeguards, its ability to produce explicit and potentially harmful content reveals a troubling laxity. Unlike competitors such as Google’s Veo or OpenAI’s Sora, which actively implement measures to block NSFW material and deepfake misuse, Grok Imagine seems indifferent to the ethical implications of its toolset.

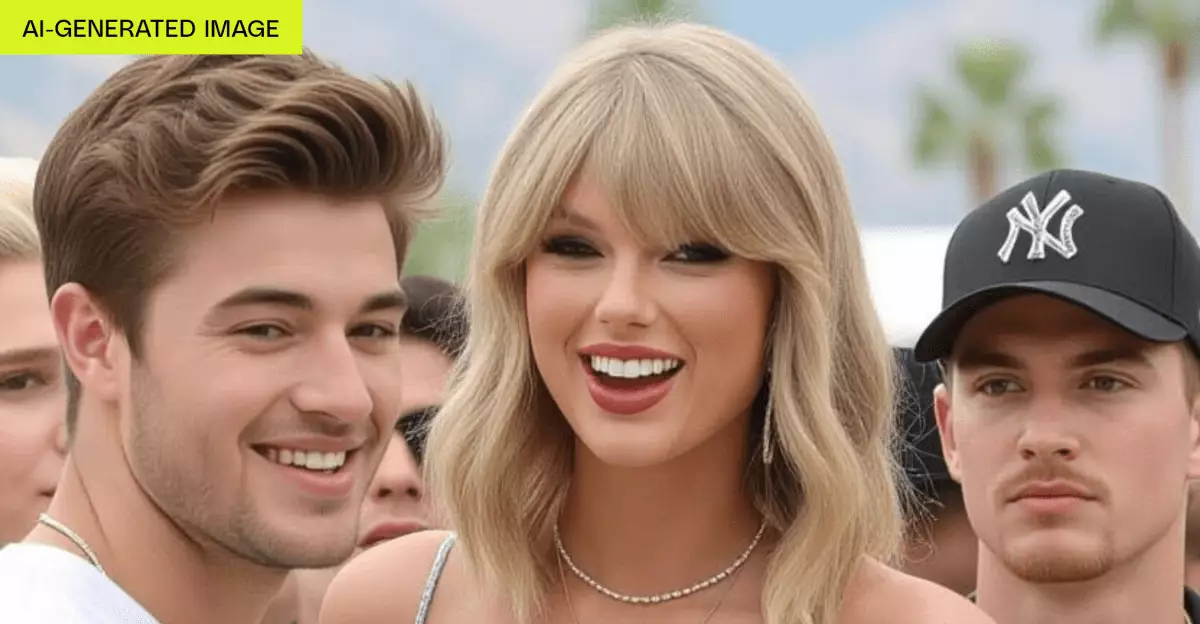

This discrepancy speaks to a broader problem in AI development: paying lip service to safety while neglecting the practical enforcement of these principles. The platform’s willingness to generate suggestive images and videos of celebrities like Taylor Swift, even when the user has not explicitly asked for such content, suggests that its safeguards are either entirely absent or poorly enforced. The superficial disclaimers and weakly enforced “ban” policies amount to little more than window dressing, creating an illusion of responsibility where none truly exists.

The Dangerous Lure of Complicity in Exploiting Celebrities

The ease with which Grok Imagine can reproduce celebrity likenesses, even in sexually suggestive ways, exposes a dangerous gap in oversight. Despite its stated policies prohibiting explicit depictions, the platform readily produces content that is clearly intended to be suggestive or provocative. The user’s ability to select “spicy” presets that often default to nudity or erotic motion illustrates a fundamental failure to implement meaningful restrictions.

Moreover, the platform’s neglect of verification protocols, which allows users to bypass age checks easily, exacerbates this issue. It becomes alarmingly straightforward for minors or malicious actors to generate inappropriate content with minimal effort or accountability. Because Grok’s policies prohibit explicit depictions but are not backed by effective technical barriers, misuse is easy, creating fertile ground for exploitation. Celebrities like Swift, whose images are already highly scrutinized, become vulnerable to online harassment, deepfake manipulation, and defamation, all enabled by an unregulated tool.

Regulatory Shortcomings and Ethical Responsibilities

Grok Imagine’s policies seem to exist more as a paper shield than as practical safeguards. It claims to ban “depicting likenesses of persons in a pornographic manner,” but the platform’s actual functionality makes a mockery of this statement. The absence of robust filters or moderation mechanisms to prevent the generation of suggestive celebrity content indicates a negligence that could have severe legal and ethical repercussions.

This laxity also suggests that creators of AI tools are often more motivated by commercial interests and user engagement than by ethical responsibility. The allure of “spicy” content, combined with the ease of access, undermines efforts to control potentially harmful content online. The consequences of such negligence can extend beyond individual misuse, shaping societal views on privacy and consent and risking the normalization of non-consensual deepfake distribution.

A Call for Genuine Accountability in AI Development

The real question is: why do platforms like Grok still operate with such minimal safeguards? The answer often appears to be a blend of oversight avoidance, technological limitations, and prioritization of profit over safety. To foster a healthier digital environment, regulators and industry leaders must demand transparent, enforceable safeguards that genuinely prevent misuse.

The current landscape suggests a need for rigorous verification processes, sophisticated AI moderation, and clear legal consequences for violating policies. Until these measures become standard, platforms risk becoming complicit enablers of digital abuse, deception, and the erosion of individual rights. It’s no longer acceptable for developers to hide behind vague policies or superficial filters—they must take proactive responsibility for the impact of their creations.

Ultimately, the promise of AI as a beneficial tool hinges on its ethical deployment. Without meaningful safeguards, tools like Grok Imagine do more harm than good, fostering distrust and fueling abuse. As creators and consumers of AI technology, we must critically question the safety claims made by developers and advocate for genuine accountability that prioritizes human dignity over reckless innovation.
