Despite rapid advances in artificial intelligence, robotics appears to be lagging behind. The result is a stark gap between what AI software can do and what robots can physically accomplish. The robots common in industrial settings such as factories and warehouses follow their programmed routines closely. They operate rigidly, typically without situational awareness or the ability to respond when something in their environment changes unexpectedly. This narrow functionality limits their usefulness for more complex and varied industrial tasks, where adaptability is key.

Although some industrial robots have limited object-recognition and manipulation skills, their dexterity remains minimal, which keeps them far from anything resembling general physical intelligence. The limitation is especially clear when set against the wide variety of tasks that a more intelligent robot might accomplish from only basic initial instructions. The gap highlights a fundamental hurdle in adapting robots to dynamic settings. This applies not only inside factories but also in everyday human environments, which are notoriously unpredictable and cluttered.

The Vision for Advanced Robotics

Despite these challenges, optimism about the future of robotics is growing, fueled by advances in AI. High-profile tech leaders such as Elon Musk are pushing this narrative forward; Tesla, for example, is developing an ambitious humanoid robot called Optimus. Musk’s claim that such robots could sell for $20,000 to $25,000 by 2040 points to a possible transformation in how robots are integrated into daily life.

However, optimism must be tempered with realism. In the past, efforts to train robots for complex tasks usually had each machine learn in isolation, with little transfer of skills between robots. Newer academic work suggests that learning can be collective, with knowledge shared among different machines performing different tasks. A recent collaborative project, Open X-Embodiment, demonstrated this approach. It pooled data from 22 different types of robots at 21 research institutions, so that models trained on the combined data could learn from all of them, broadening what the robots could do.

Challenges Ahead

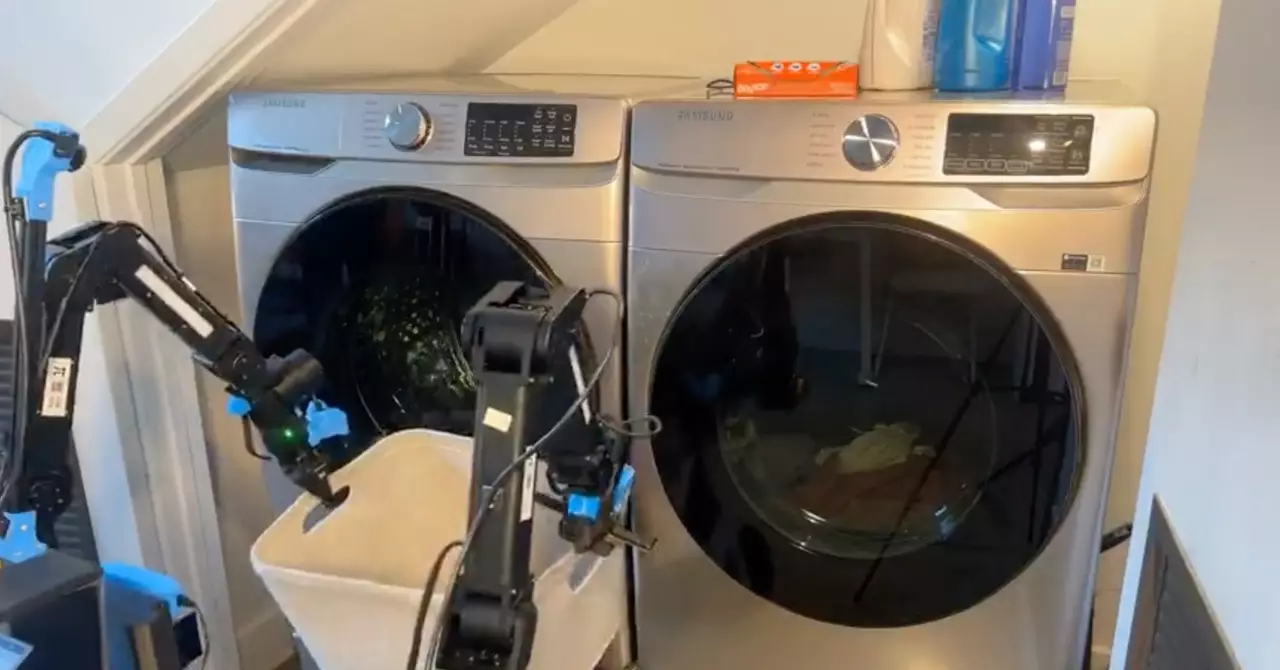

Nonetheless, challenges persist. The data available for training robots is tiny compared with the vast body of text that drives large language models. As a result, companies such as Physical Intelligence must generate their own datasets while also developing methods that learn effectively from limited data. The company's technique combines a vision-language model with generative diffusion modeling and aims to give robots a more nuanced understanding of tasks. It also underscores how much further data-driven learning will need to scale.

Robots that can accept and carry out a broad range of tasks as humans do will only become a reality if robot training evolves considerably. The ideas being explored today could become the foundation for tomorrow's robots, but for all that potential, a long road remains. As Levine notes, the future is uncertain but promising. It offers a glimpse of what advanced robotics might look like if these foundational hurdles can be overcome.
