Chinese startup DeepSeek continues to position itself as a formidable challenger to established AI vendors. The firm, which grew out of High-Flyer Capital Management, has made headlines with the release of its latest ultra-large model, DeepSeek-V3. The model is a technical feat in its own right, and DeepSeek presents it as a step toward democratizing AI technology and toward the anticipated frontier of artificial general intelligence (AGI).

DeepSeek has adopted an open-source approach, a strategic choice that diverges sharply from the closed-source practices of major competitors such as OpenAI and Anthropic. By making its AI tools freely accessible and modifiable, DeepSeek promotes greater collaboration within the AI community and lets developers and enterprises experiment with cutting-edge models. This mindset deserves to be celebrated, as it aims to ensure that AI technology benefits a broader spectrum of innovators.

The core architectural features of DeepSeek-V3 are impressive. The model has 671 billion parameters and employs a mixture-of-experts (MoE) architecture. For each token, this structure activates only a subset of those parameters, 37 billion, so most of the network sits idle on any given token and each token is handled by the experts best suited to it. Because far less computation is needed per token than in a dense model of the same size, the cost of running the model is significantly reduced, a crucial point for organizations looking to integrate advanced AI capabilities without incurring prohibitive expenses.
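To make the "only a subset is active" idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration of the general MoE technique, not DeepSeek's implementation: the function names, the four tiny "experts", and the gate weights are all hypothetical, and only the 671 billion and 37 billion figures come from the article.

```python
import math

def top_k_route(scores, k):
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    peak = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - peak) for i in top]  # shift by max for stability
    total = sum(exps)
    return top, [e / total for e in exps]

def moe_forward(x, experts, gate_weights, k):
    """Run only the k selected experts on x and mix their outputs."""
    # One gate score per expert: dot product of that expert's gate row with x.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    chosen, weights = top_k_route(scores, k)
    out = [0.0] * len(x)
    for idx, weight in zip(chosen, weights):
        y = experts[idx](x)  # experts that were not chosen are never evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen

def make_expert(scale):
    """Hypothetical stand-in for an expert network: scales its input."""
    return lambda x: [scale * xi for xi in x]

experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

out, used = moe_forward([2.0, 1.0], experts, gate_weights, k=2)
print("experts used:", used)

# Share of parameters active per token, using the article's figures.
active_fraction = 37e9 / 671e9
print(f"active share per token: {active_fraction:.1%}")
```

With the article's numbers, roughly one parameter in eighteen participates in any single token, which is where the inference savings come from.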

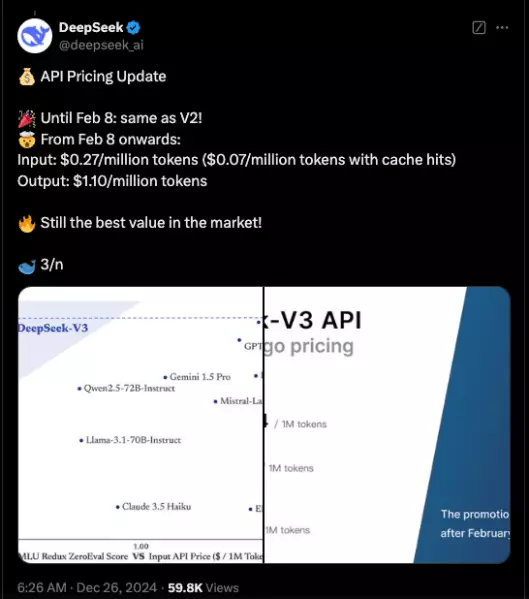

DeepSeek’s own benchmarks show DeepSeek-V3 outperforming other established models, including Meta’s Llama 3.1-405B and even some closed-source solutions. The comparisons particularly highlight its strength in mathematics and the Chinese language, areas where many existing models struggle. If the claimed results hold up, they point not only to the model’s adept handling of varied tasks but also to a tangible shift toward more balanced competition in the field of AI.

To improve on its earlier models, DeepSeek incorporates two notable techniques: an auxiliary-loss-free load-balancing mechanism and multi-token prediction (MTP). The load-balancing strategy monitors how heavily each expert is used and adjusts the routing so that the load stays even, without the extra loss term that such balancing usually requires and that can cost some model performance. Meanwhile, MTP lets the model predict multiple future tokens at once rather than one at a time, reportedly tripling its generation speed to 60 tokens per second.
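The load-balancing idea can be sketched as a small simulation. This is a simplified illustration under stated assumptions, not DeepSeek's code: each expert gets a bias that is added to its routing score only when experts are selected, and after every batch the bias is nudged down for overloaded experts and up for underloaded ones. The names `route`, `update_bias`, and `simulate`, the step size `gamma`, and the synthetic skewed scores are all hypothetical.

```python
import random

def route(scores, bias, k):
    """Select the k experts with the highest biased score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    return order[:k]

def update_bias(bias, load, gamma):
    """Lower the bias of overloaded experts, raise it for underloaded ones."""
    mean = sum(load) / len(load)
    new_bias = []
    for b, l in zip(bias, load):
        if l > mean:
            new_bias.append(b - gamma)
        elif l < mean:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias

def simulate(steps, tokens_per_step=256, n_experts=8, k=2, gamma=0.05, seed=0):
    """Route synthetic tokens and record the per-expert load at every step."""
    rng = random.Random(seed)
    # Synthetic skew: higher-numbered experts start out strongly preferred.
    skew = [0.5 * i for i in range(n_experts)]
    bias = [0.0] * n_experts
    history = []
    for _ in range(steps):
        load = [0] * n_experts
        for _ in range(tokens_per_step):
            scores = [rng.gauss(skew[i], 1.0) for i in range(n_experts)]
            for i in route(scores, bias, k):
                load[i] += 1
        history.append(load)
        bias = update_bias(bias, load, gamma)  # no gradient or loss term involved
    return history, bias

def spread(load):
    """Gap between the busiest and the idlest expert."""
    return max(load) - min(load)

history, final_bias = simulate(steps=200)
print("load spread, first step:", spread(history[0]))
print("load spread, last step: ", spread(history[-1]))
```

The point of the design is that balance is enforced by adjusting a routing bias between steps rather than by adding a penalty to the training objective, so the objective itself stays focused on model quality.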

Such advances not only increase training efficiency but also significantly reduce computational costs. The entire training process of DeepSeek-V3 took approximately 2.8 million GPU hours, at a cost of about $5.57 million, far below the financial outlays usually associated with training competing models. That figure has raised questions about how AI research and development will be carried out in the future.
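The relationship between the two figures is simple arithmetic, sketched below. The article gives only the rounded totals; the more precise inputs used here (2.788 million GPU hours at an assumed rental price of $2 per GPU hour) are the values stated in DeepSeek's technical report and should be read as that report's assumptions, covering the final training run only.

```python
# Rounded figures quoted in the article.
article_gpu_hours = 2.8e6
article_cost_usd = 5.57e6

# Implied price per GPU hour from the rounded figures.
implied_rate = article_cost_usd / article_gpu_hours
print(f"implied rate: ${implied_rate:.2f} per GPU hour")

# Assumed inputs from DeepSeek's technical report (not stated in the article).
report_gpu_hours = 2.788e6
assumed_rate_usd = 2.00  # assumed rental price per GPU hour

estimated_cost = report_gpu_hours * assumed_rate_usd
print(f"estimated training cost: ${estimated_cost / 1e6:.3f} million")
```

Because the estimate is just hours multiplied by an assumed rental rate, it scales linearly with that rate and leaves out research, staffing, and earlier experiments.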

Performance Benchmarks: A Serious Contender

While DeepSeek-V3 has demonstrated significant advantages in specific areas, it is important to recognize the competition it faces. Benchmarks indicate that it surpasses many open-source models and is competitive with closed models such as Anthropic’s Claude 3.5 Sonnet in certain evaluations. However, no model is invincible; DeepSeek-V3 shows some weaknesses in English-focused assessments, where OpenAI’s offerings sometimes outperform it.

Nonetheless, DeepSeek-V3 truly shines on specific benchmarks, particularly in mathematics, as shown by its score of 90.2 on the MATH-500 test. Such results position the model as a leader in certain niches and add to the broader narrative of open-source models closing the performance gap with their closed-source counterparts.

The arrival of DeepSeek-V3 suggests a promising trajectory for the future of AI, particularly within the open-source arena. As industry leaders become more receptive to the quality of open-source solutions, organizations can prepare for an environment defined by competition and choice. The increasing capabilities of models like DeepSeek-V3 signal a shift in which AI is no longer the purview of a few powerful companies; instead, the potential for innovation spreads across a diverse ecosystem of contributors.

As enterprises explore the possibilities offered by DeepSeek-V3, the implications for the market are profound. With the model available under accessible terms that are generous to developers, the opportunity to experiment with advanced AI has never been greater. Given the availability of the code on GitHub and of access through commercial APIs, the stage is set for a spirited expansion in AI capabilities underpinned by open-source principles.

DeepSeek-V3 marks a significant milestone in the evolution of AI technologies. It exemplifies the potential for open-source platforms to challenge the status quo and highlights the innovation that emerges when barriers are lowered. As AI evolves, DeepSeek’s ambitious projects may not only reshape our understanding of what is possible with these technologies but also help pave the way for equitable access to the tools that drive progress in the field. The race toward AGI is a collective effort, and with models like DeepSeek-V3, the road seems clearer than ever.
