In an era dominated by technology, the rise of social media platforms has transformed how teenagers connect, learn, and express themselves. Yet these digital spaces are rife with risks, ranging from cyberbullying and exploitation to deceptive scams. Recognizing these perils, Meta, the parent company of Instagram, has set out to protect its young users with new safety measures. While these initiatives appear well-intentioned, it’s imperative to scrutinize both their effectiveness and their sincerity. Are they enough to shield adolescents from the multifaceted dangers lurking online, or are they more about image management and regulatory appeasement?

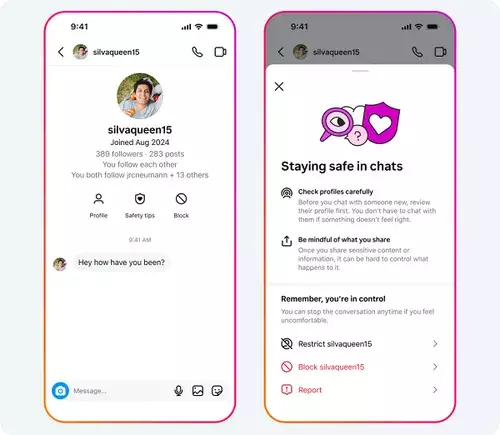

Meta’s recent rollouts, such as integrated safety prompts and streamlined blocking mechanisms, signal an understanding that protecting young users requires usability alongside security. The addition of real-time “Safety Tips” within chat interfaces aims to educate teens about spotting scams and navigating interactions with strangers. Though seemingly helpful, this approach might be more superficial than substantive—knowledge alone seldom shifts behavior, especially when young users are often unaware of how sophisticated and persistent online threats can be.

Introducing quick-access blocking features and contextual account information certainly enhances user control, but the true question remains: Do these tools empower teenagers to make safer choices autonomously, or do they merely serve as reactive barriers after harm has occurred? Meta’s move to combine blocking and reporting into a single action simplifies the user experience but raises concerns about whether such frictionless solutions might inadvertently encourage complacency. If reporting becomes too easy, it might reduce the perceived seriousness of online misconduct, blunting societal efforts to hold offenders accountable.

Addressing Predatory Behaviors and Exploitation

One of the most alarming issues highlighted by Meta’s disclosures is the presence of accounts involved in sexual exploitation of minors. The removal of thousands of accounts involved in soliciting inappropriate content underscores the persistent challenge digital platforms face in policing predatory behaviors. While this demonstrates Meta’s resolve to scrub harmful content, it also reveals the scale of the problem: hundreds of thousands of related accounts continue to exist, which makes this a systemic issue rather than one that can be easily eradicated.

The platform’s focus on removing explicitly offensive profiles is necessary, but it shouldn’t obscure the broader, less visible problem: predators often operate quietly through indirect means, manipulative messages, or encrypted spaces that are harder to police. Relying solely on reactive account removal means playing catch-up rather than preventing harm. More proactive engagement, such as AI-driven monitoring that detects grooming behaviors before they escalate, would signal a genuinely serious approach. Platform responsibility must also be paired with stronger legal and societal accountability, which remains sparse in the face of such threats.

Empowering Young Users Without Overreach

Meta’s efforts extend to limiting recommendations to teens, especially by cutting off interactions with suspicious or adult-linked accounts. Such policies aim to minimize vulnerability by putting barriers between teens and would-be predators. However, restricting content and connections can easily slide into paternalism if it is not balanced carefully against users’ rights to digital freedom and privacy. Teenagers are often resourceful and should be entrusted with tools that educate them rather than shield them entirely.

Furthermore, measures like location notices, nudity filters, and message restrictions are positive steps, but are they enough? The statistics claiming high adoption rates of safety features, such as nudity protections that 99% of users keep active, are promising. The real measure, however, is the quality of safety, not mere participation. How many teens continue to encounter harmful content, and how many hide or bypass filters? These questions reveal that safety cannot solely be about numbers; it must be about effectiveness and ongoing refinement.

Regulatory and Ethical Dimensions

Beyond internal safety features, Meta’s stance on raising the minimum age for social media access signals a recognition that regulatory frameworks are lagging behind technological realities. The proposed EU-wide “Digital Majority Age” aligns with broader societal concerns regarding minors’ exposure to potentially damaging online environments. From a strategic perspective, Meta’s support appears to be both ethical and pragmatic—by aligning itself with regulatory trends, it hopes to stave off stricter controls and protect its business interests.

Nonetheless, the question of enforcement looms large. Setting a higher age threshold is only effective if age verification mechanisms are rigorous; otherwise, underage users will find ways around restrictions, rendering the policy superficial. Ultimately, the goal should be a balanced approach—limiting access to vulnerable minors while respecting their rights and stages of development. Meta’s support for this initiative signifies a willingness to adapt, but genuine progress depends on transparent implementation and cooperation with regulators.

—

While Meta’s recent safety initiatives are steps in the right direction, they often feel more like symbolic gestures than comprehensive solutions. The complexities of online safety require persistent innovation, legal accountability, and a cultural shift in how we view digital childhood. It’s easy to laud technological fixes, but true protection hinges on a collective effort that combines platform responsibility, parental engagement, legal enforcement, and societal awareness. Only then can we truly hope to create online spaces where teenagers are not just safer, but empowered to navigate their digital worlds with confidence.
