Large language models (LLMs) dominate today’s AI landscape. Companies like OpenAI, Google, and Meta have poured enormous computational resources into models with hundreds of billions of parameters. Parameters are the adjustable values a model tunes during training to capture patterns in language, and adding more of them has generally improved performance and accuracy. But this pursuit of ever-greater capability carries real costs, namely exorbitant training bills and immense energy consumption. Google’s Gemini 1.0 Ultra alone reportedly cost an estimated $191 million to train. The future of AI may lie not in amplifying size but in rethinking scale.

The Environmental Cost of Power

The energy demands of large models raise clear ecological concerns. According to the Electric Power Research Institute, a single query to a model like ChatGPT uses about ten times as much energy as a single Google search. As awareness of tech’s environmental impact grows, these colossal models face increasing scrutiny. Heavy energy consumption not only threatens profitability but also raises ethical questions about sustainability. The search for alternatives that deliver efficiency without sacrificing quality is therefore not merely academic; it is imperative for the future of responsible AI.

Embracing Small Language Models

Enter small language models (SLMs), which pack considerable value into just a few billion parameters. SLMs are built for specific tasks rather than general-purpose use. They may not handle very long conversations or complex image processing, but they thrive in niche applications, such as giving instant responses in telehealth settings or serving as components in smart-home devices. As Zico Kolter of Carnegie Mellon University puts it: “For a lot of tasks, an 8 billion–parameter model is actually pretty good.” The remark captures a growing sentiment in the AI community: sometimes, smaller is indeed better.
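To make the size gap concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. It assumes standard numeric formats (4 bytes for 32-bit floats, 2 for 16-bit floats, 1 for 8-bit integers); the function name and the 300-billion-parameter figure are illustrative assumptions, not numbers from any specific model, and real deployments need extra memory beyond the weights.

```python
# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str = "fp16") -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# An 8 billion-parameter model, as in the quote above.
small_gb = weight_memory_gb(8_000_000_000)

# A hypothetical 300 billion-parameter model (illustrative size only).
large_gb = weight_memory_gb(300_000_000_000)

print(f"8B model at fp16:   {small_gb:.0f} GB")
print(f"300B model at fp16: {large_gb:.0f} GB")
```

Under these assumptions the smaller model's weights fit in roughly the memory of a single high-end consumer or workstation GPU, while the larger one would have to be split across many accelerators.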

A New Approach to Model Training

One of the most innovative ways to build small models is to lean on the capabilities of large ones. In knowledge distillation, a larger model acts as a teacher and passes its understanding to a smaller student model. Instead of learning from raw, messy data, the SLM trains on cleaner, high-quality data generated by the larger model, which helps it maintain strong performance despite its reduced size. This shift in data curation is a significant advantage: it lets SLMs perform their tasks with precision while sidestepping the pitfalls of messy training data.
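The teacher-student idea can be sketched in a few lines. The article does not say which distillation recipe is used, so the example below assumes one common variant: the student is penalized when its output distribution differs from the teacher's "softened" distribution, measured by KL divergence. The function names, logits, and temperature value are all hypothetical and chosen only for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)  # teacher probabilities
    q = softmax(student_logits, temperature)  # student probabilities
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical scores over three candidate next tokens.
teacher = [4.0, 1.0, 0.5]
student_close = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
student_far = [0.5, 1.0, 4.0]    # prefers a different token

print(distillation_loss(teacher, student_close))
print(distillation_loss(teacher, student_far))
```

A student that agrees with the teacher gets a loss near zero, while one that favors a different answer gets a larger loss, so minimizing this quantity during training pulls the small model toward the large model's behavior.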

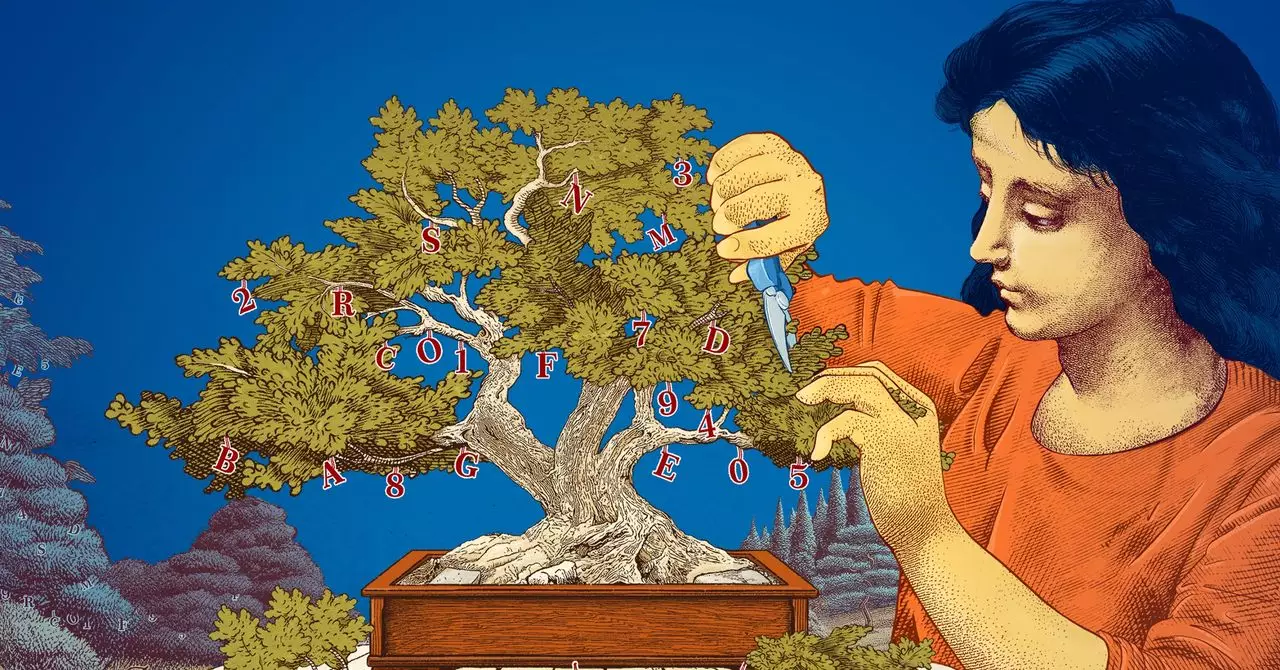

Cutting-Edge Techniques: Pruning for Efficiency

Another route to small models is pruning: removing unnecessary parts of a neural network to make it more efficient. The idea is long-standing and well researched; Yann LeCun and colleagues described an early version of it in a 1989 paper under the name “optimal brain damage.” Pruning trims weights and connections that do not contribute meaningfully to performance. The result is a leaner model that can be tailored to specific tasks without the overhead of a larger one. This streamlining serves the broader goal of improving AI capabilities while minimizing resource use.
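As a rough illustration of pruning, the sketch below uses magnitude pruning, which simply zeroes out the weights with the smallest absolute values. This is a simpler stand-in, not the "optimal brain damage" method itself, which ranks weights using second-derivative information about the loss. The function name, weights, and sparsity level are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of weights with the smallest-magnitude fraction set to zero."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Rank weight positions from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_prune])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


# Hypothetical weights from one small layer.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

In practice a pruned model is usually fine-tuned afterwards so the remaining weights can compensate for the ones removed.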

Empowering Research and Creativity

Smaller models do more than enable efficient applications; they also give researchers an inexpensive way to explore uncharted territory. Because experiments on small models carry less risk, scientists can test novel ideas without the financial burden that larger models impose. Leshem Choshen of the MIT-IBM Watson AI Lab notes that these smaller setups let researchers validate concepts with significantly lower stakes. With fewer parameters, small models may also be more transparent and easier to interpret, which further encourages rigorous exploration.

Defining the Future of AI: Simplicity vs. Capability

As artificial intelligence evolves, the balance of power, efficiency, and sustainability is increasingly defining its place in society. Large, heavily parameterized models have profound capabilities, especially in general-purpose settings like chatbots and advanced predictive analytics, but SLMs are a necessary counterbalance. The efficiency gained by targeting specific tasks conserves valuable resources and broadens access to machine learning. As researchers probe what constitutes “intelligence” in machines, it becomes clear that the conversation is not simply about building larger systems but about making smarter, more efficient choices. As the potential of small language models is unlocked, AI development may be entering an era that prioritizes sustainability without sacrificing efficacy.
