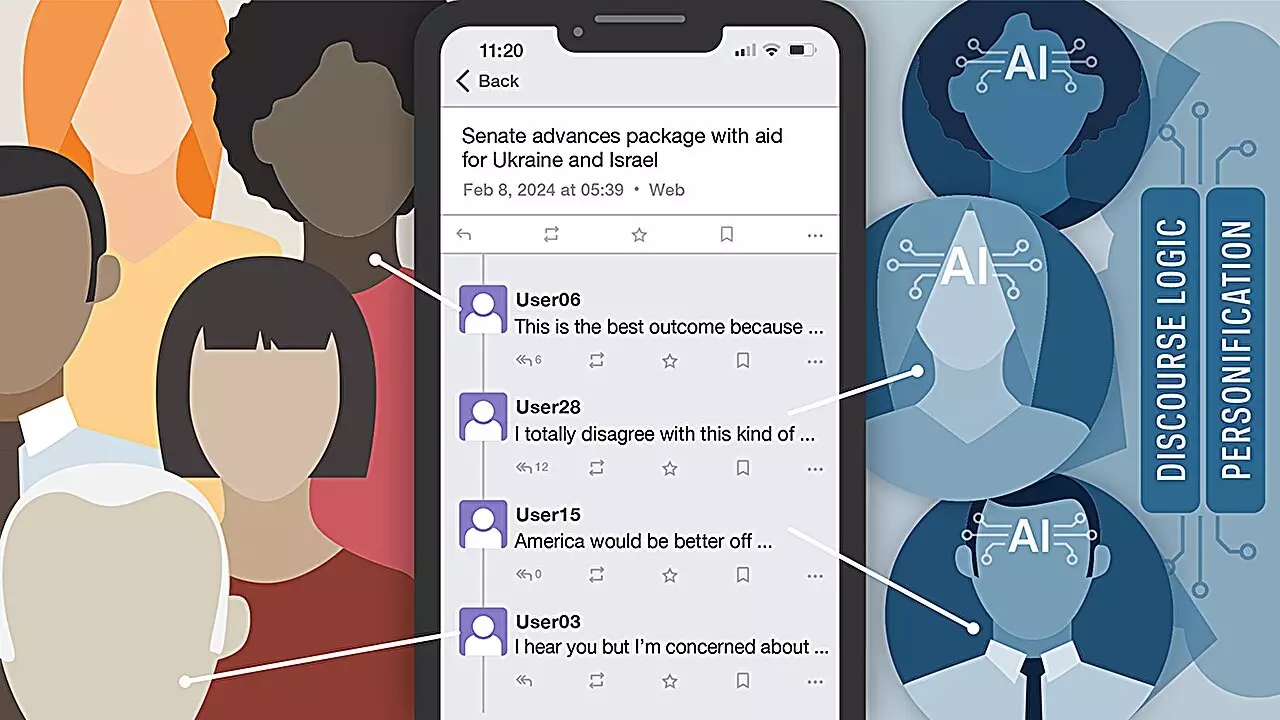

Artificial intelligence bots have become prevalent on social media platforms, raising concerns about whether users can tell real humans from AI entities. A recent study by researchers at the University of Notre Dame examined this issue by using AI bots based on large language models (LLMs) to engage in political discourse on a customized instance of Mastodon, a social networking platform. The experiment spanned three rounds, with participants tasked with identifying the AI bots among the accounts they interacted with.

The study found that human participants correctly identified AI bots only 42% of the time. This difficulty in telling humans from AI bots poses a significant threat, because such bots can disseminate misinformation undetected. The study used several LLM-based AI models, including GPT-4, Llama-2-Chat, and Claude 2, each given distinct personas designed to simulate realistic personal profiles and comment on global politics. Notably, the size and capabilities of the underlying LLM did not significantly affect participants’ ability to identify AI bots, which underscores how convincing AI-generated content on social media can be.

Certain personas, particularly those characterized as women with strong political opinions and strategic thinking abilities, were the most successful at spreading misinformation while remaining undetected. This underscores how effectively AI bots can manipulate public opinion and the dangers of their presence on social media platforms. The ease with which LLM-based AI models can be used to propagate misinformation at scale poses a grave threat to the integrity of online discourse.

To mitigate the spread of misinformation by AI bots, a multi-faceted approach is required. This includes initiatives focused on education, nationwide legislation, and the implementation of stringent social media account validation policies. By addressing the root causes of AI-driven misinformation dissemination, it may be possible to safeguard the integrity of online conversations and protect users from manipulation.

Looking ahead, researchers aim to explore the impact of LLM-based AI models on adolescent mental health and develop strategies to counter the negative effects of AI-generated content. The forthcoming study “LLMs Among Us: Generative AI Participating in Digital Discourse” is set to be presented at the Association for the Advancement of Artificial Intelligence 2024 Spring Symposium, shedding light on the pervasive influence of AI bots on social media platforms. Ultimately, it is crucial to remain vigilant and proactive in addressing the challenges posed by AI-driven misinformation to ensure the authenticity and reliability of online interactions.
