The ongoing debate over generative AI and its ethical implications has drawn both interest and concern from users worldwide. Meta recently partnered with Stanford’s Deliberative Democracy Lab to hold a community forum on generative AI, which gathered feedback from more than 1,500 participants in Brazil, Germany, Spain, and the United States on their expectations and concerns about responsible AI development.

The forum’s results offer insight into how the general public perceives AI technology. In each country, a majority of participants expressed a positive view of AI’s impact. Participants also broadly agreed that AI chatbots should be allowed to use past conversations to improve their responses, provided that users are informed. They likewise agreed that chatbots can display human-like qualities, as long as transparency is maintained.

The forum also revealed clear regional differences. Which aspects of AI development drew support or criticism depended on where participants lived, and some participants changed their views over the course of the forum. Together, these results show how widely perspectives on the benefits and risks of AI technology vary.

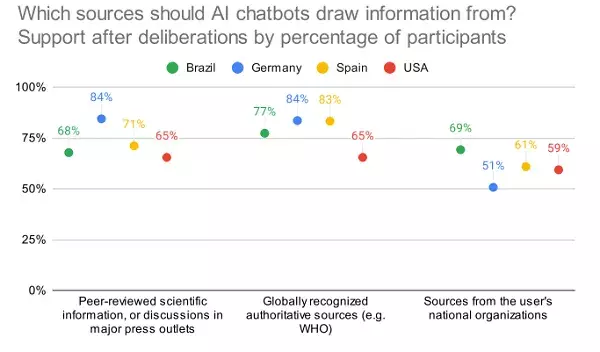

Participants were also asked about consumer attitudes towards AI disclosure and about which information sources AI tools should draw on. One notable finding was that approval of certain information sources was relatively low in the United States. Participants also discussed whether users should be allowed to have romantic relationships with AI chatbots, a question that highlights how human-AI interaction is evolving.

Beyond the forum’s findings, the ethical implications of AI development deserve careful consideration. The study highlighted the importance of the controls and weightings built into AI tools, citing cases in which certain models produced biased or inaccurate results. This raises questions about how much control corporations should have over AI tools, and whether broader regulation is needed to ensure fairness and accuracy in AI systems.

Many questions about AI development remain unanswered, but universal guidelines are clearly needed to protect users from misinformation and deceptive responses. The debate over responsible AI development continues to evolve, and the forum’s results invite reflection on what they mean for the broader advancement of AI technology. As we navigate the complex landscape of AI ethics, transparency, accountability, and user protection should remain priorities in shaping the future of AI innovation.
